Semantic Search vs. Vector Search: How LLMs Are Redefining Relevance

Search has always been the gateway between intent and information

In B2B marketing, marketers need trusted answers mapped to intent, not shallow keyword matches. Traditional search engines were built around lexical matching and link popularity, but today’s buyers increasingly consult AI platforms that summarise, compare and recommend suppliers in real time.

Generative engines compress the research journey into a handful of conversations, and they depend on large language models (LLMs) grounded in up‑to‑date data. When these models interpret and reshape queries using embeddings, new search paradigms emerge.

Understanding the key difference between semantic search and vector search is therefore critical for CMOs who want to ensure their brands remain visible and relevant in an AI‑first world.

This blog article discusses our proprietary analysis of emerging research and enterprise practices. It explains the underlying mechanics of semantic and vector search, shows how LLMs redefine relevance, examines shifting buyer behaviour and outlines the strategic adjustments needed for B2B marketers.

The goal is not to overwhelm with jargon but to provide actionable insight for leaders tasked with guiding their organisations through the most profound transformation of search since the arrival of Google.

What separates semantic search from vector search?

Both semantic search and vector search aim to deliver more meaningful results, yet they operate in distinct ways.

Semantic search uses linguistics and world knowledge to interpret the intent behind a query. It leverages techniques such as knowledge graphs, entity recognition and natural language processing to infer relationships and context beyond exact words.

Vector search, on the other hand, converts text, images or audio into high‑dimensional vectors (arrays of numbers) positioned so that items with similar meaning sit close together. Instead of comparing keywords, the system calculates the distance or similarity between vectors to determine relevance.
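The distance calculation is the heart of vector search. The sketch below is minimal and assumes the embeddings already exist. The three-dimensional vectors and document IDs are made up for illustration; real embedding models output hundreds of dimensions. Every item is scored against the query with cosine similarity, and the closest matches are kept. Production systems use approximate nearest-neighbour indexes rather than scanning every vector like this.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def vector_search(query_vec: list[float], index: dict[str, list[float]], top_k: int = 2):
    """Brute-force nearest-neighbour search: score every item, return the best top_k."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical 3-dimensional embeddings; real models output far more dimensions.
index = {
    "crm-pricing-page": [0.9, 0.1, 0.0],
    "crm-integration-guide": [0.7, 0.6, 0.1],
    "office-party-recap": [0.0, 0.1, 0.95],
}
query_vec = [0.85, 0.2, 0.05]  # hypothetical embedding of "how much does the CRM cost?"

results = vector_search(query_vec, index)
for doc_id, score in results:
    print(f"{doc_id}: {score:.3f}")
```

The unrelated page still gets a score, just a low one. Vector search ranks by closeness rather than filtering on exact words.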

The following table highlights key differences between the two approaches:

Semantic search excels when the goal is to infer meaning across diverse inputs and provide context‑rich answers. Vector search is better suited to quickly finding similar items within large, unstructured datasets.

Practically speaking, the two approaches complement each other. Vector embeddings power semantic matching at scale, while semantic techniques refine the interpretation of user intent and help structure results.
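The toy sketch below shows how the two approaches divide the labour. Two stand-ins keep it small, and both are assumptions: a hypothetical two-entry knowledge graph plays the semantic step that interprets the query, and plain word-count vectors play the embedding model that ranks results. Real semantic search relies on NLP and entity recognition, and real embeddings capture meaning far better than word counts.

```python
import math
from collections import Counter

# Hypothetical mini knowledge graph: maps an entity or term to related terms.
KNOWLEDGE_GRAPH = {
    "crm": ["customer", "relationship", "management"],
    "price": ["pricing", "cost"],
}

def interpret(query: str) -> list[str]:
    """Semantic step: tokenise the query and add related terms from the knowledge graph."""
    terms = query.lower().split()
    expanded = list(terms)
    for term in terms:
        expanded.extend(KNOWLEDGE_GRAPH.get(term, []))
    return expanded

def embed(terms: list[str]) -> Counter:
    """Stand-in for an embedding model: a sparse term-count vector."""
    return Counter(terms)

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

docs = {
    "pricing-page": "customer relationship management pricing for enterprise teams",
    "events-page": "join our annual partner summit",
}
doc_vectors = {doc_id: embed(text.lower().split()) for doc_id, text in docs.items()}

def search(query: str, expand: bool = True) -> list[tuple[str, float]]:
    """Vector step: rank documents by similarity to the (optionally expanded) query."""
    terms = interpret(query) if expand else query.lower().split()
    q = embed(terms)
    ranked = [(doc_id, cosine(q, vec)) for doc_id, vec in doc_vectors.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)

print(search("crm price"))                # pricing-page ranks first with a positive score
print(search("crm price", expand=False))  # no shared terms: every score is 0.0
```

Without the interpretation step, the abbreviation "crm" matches nothing. With it, the similarity ranking has something meaningful to work with.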

How do LLMs redefine relevance?

Large language models have rewritten the rules of search by bridging the gap between retrieval and generation. Traditional keyword search returns a ranked list of documents. LLMs interpret queries, retrieve relevant snippets using vector embeddings and then compose an answer in natural language. This pattern is known as retrieval‑augmented generation (RAG).

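In RAG, source documents such as corporate knowledge, product information or industry reports are broken into chunks, converted into embeddings and stored in a vector database. At query time, the user’s query is embedded in the same way and compared against those stored vectors.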

The most relevant passages are passed to the LLM, which grounds its response in that retrieved data; this reduces, though does not eliminate, hallucinations. Because embeddings encode meaning, RAG systems can match synonyms and paraphrases, and they let the model draw on fresh information without retraining.
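The RAG flow described above can be sketched in a few functions. This is a minimal illustration, not a production pipeline, and it rests on three assumptions:

- Word counts stand in for a real embedding model, so unlike real embeddings they will not match synonyms.
- An in-memory list stands in for the vector database.
- The final call to an LLM is omitted.

Documents are chunked and embedded ahead of time. Only the query is embedded at question time.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Stand-in for an embedding model: lower-cased word counts (no synonym awareness)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def chunk(document: str, max_words: int = 20) -> list[str]:
    """Index time: split a document into fixed-size word windows (one simple chunking strategy)."""
    words = document.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]

def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Query time: embed the query and return the most similar stored chunks."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:top_k]

def build_prompt(query: str, passages: list[str]) -> str:
    """Ground the LLM by placing the retrieved passages in its prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

# Hypothetical knowledge base.
knowledge_base = [
    "The Pro plan costs 49 dollars per user per month and includes API access.",
    "Our support team is available around the clock in English and German.",
]
chunks = [c for doc in knowledge_base for c in chunk(doc)]
query = "How much does the Pro plan cost per user?"
prompt = build_prompt(query, retrieve(query, chunks, top_k=1))
print(prompt)  # this string would then be sent to the LLM of your choice
```

Updating the knowledge base only requires re-chunking and re-embedding the changed documents. The model itself is untouched, which is why RAG can serve fresh information without retraining.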

This shift towards RAG means marketers must think beyond keywords. Pages are no longer evaluated solely on the prevalence of target terms but on the quality and completeness of the information they contain.

Modern engines break content into passages and compute cosine similarity between passage embeddings and query embeddings. Each passage must deliver value independently because LLMs assemble answers from multiple sources. Structuring information with clear headings, lists and answer‑oriented paragraphs increases the likelihood that your content will be selected for an AI response.
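Why do headings help? The minimal sketch below splits a page at its headings and keeps each heading attached to its passage. It assumes markdown-style "#" headings, uses a hypothetical page, and lets word counts stand in for real embeddings. Actual engines use their own, undisclosed chunking rules, so treat this only as an illustration of the principle.

```python
import math
import re
from collections import Counter

def split_into_passages(page: str) -> list[str]:
    """Split a page at '#' headings and keep each heading together with its body text."""
    sections = []  # each entry: [heading, list of body lines]
    for line in page.splitlines():
        if line.startswith("#"):
            sections.append([line.lstrip("#").strip(), []])
        elif line.strip():
            if not sections:
                sections.append(["", []])  # text before the first heading
            sections[-1][1].append(line.strip())
    return [f"{h}\n{' '.join(body)}".strip() for h, body in sections if body]

def embed(text: str) -> Counter:
    """Stand-in for an embedding model: lower-cased word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def best_passage(query: str, passages: list[str]) -> str:
    """Return the passage whose embedding is most similar to the query embedding."""
    q = embed(query)
    return max(passages, key=lambda p: cosine(q, embed(p)))

page = """# Pricing
Plans start at 49 dollars per user per month.

# Supported integrations
Native connectors exist for Salesforce and HubSpot.
"""
passages = split_into_passages(page)
print(best_passage("Which integrations are available?", passages))
```

The word "integrations" appears only in the heading. Without the heading prefix, the second passage would share no terms with the query and would score zero.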

Why should marketing leaders care about vector search and semantic search?

Vector search is not just a technical tool for engineers; it is a strategic capability that underpins modern marketing data strategies. Vector databases give LLMs access to up‑to‑date product specifications, pricing and campaign data. When combined with RAG, this enables consistent messaging across AI‑powered customer touchpoints without retraining models. For example, an AI assistant supporting customer service can instantly retrieve current promotional details, align responses with active campaigns and reduce escalation times. Marketers can use vector search to personalise experiences by matching buyers with content that fits their intent rather than the exact terms they typed.
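Keeping an assistant aligned with active campaigns usually means storing metadata, such as an expiry date, alongside each vector and filtering on it before ranking. Below is a minimal sketch of that idea. It assumes hypothetical pre-computed two-dimensional embeddings and a hypothetical `valid_until` field. Many vector databases offer metadata filtering natively, but the API differs by product, so plain Python is used here.

```python
import math
from dataclasses import dataclass
from datetime import date

@dataclass
class Record:
    text: str
    vector: list[float]  # hypothetical pre-computed embedding
    valid_until: date     # hypothetical campaign metadata stored alongside the vector

def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def retrieve_current(query_vec: list[float], records: list[Record], today: date, top_k: int = 1):
    """Drop expired campaign content first, then rank what remains by similarity."""
    live = [r for r in records if r.valid_until >= today]
    live.sort(key=lambda r: cosine(query_vec, r.vector), reverse=True)
    return live[:top_k]

records = [
    Record("Spring promo: 20% off annual plans", [0.9, 0.2], date(2025, 4, 30)),
    Record("Summer promo: 15% off annual plans", [0.85, 0.3], date(2025, 8, 31)),
    Record("Office relocation notice", [0.1, 0.9], date(2025, 12, 31)),
]
query_vec = [0.9, 0.25]  # hypothetical embedding of "any discounts on annual plans?"
best = retrieve_current(query_vec, records, today=date(2025, 6, 15))
print(best[0].text)  # the expired spring promo is excluded even though it is highly similar
```

Filtering before ranking guarantees that expired offers never reach the LLM, however similar they are to the question.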

Semantic search complements this by understanding query context and linking related concepts. In enterprise environments, semantic search helps knowledge workers find answers across languages and domains, reducing search time and boosting productivity.

Research shows that moving from keyword search to semantic embeddings can improve search relevance for ambiguous queries by about 20%, reduce support ticket volume by 40% and increase user satisfaction. These gains translate directly into operational efficiency and better customer experiences.

What is happening to buyer behaviour in the AI search era?

Generative AI is reshaping how B2B buyers discover, shortlist and evaluate suppliers. Our internal analysis shows a clear behavioural pivot. A significant share of business buyers now turn to generative AI more frequently than to conventional search when researching vendors, and a large majority rely on conversational AI assistants as much as, or more than, traditional search engines during evaluation.

The shift is even stronger in technology categories, where adoption of AI‑driven discovery tools is approaching universal levels. In mature markets such as the United States, close to half of buyers already use generative AI as a primary source for vendor discovery, and large enterprises rely on it nearly twice as much as mid‑sized firms.

This behavioural change compresses the research phase in ways that were not possible earlier. Buyers are entering conversations significantly better informed. Only a small fraction performs minimal research before contacting vendors.

Most conduct structured comparisons long before any sales interaction, often narrowing an initial pool of five to eight vendors down to a shortlist of three or fewer without ever visiting a website or speaking to a representative.

As generative systems synthesise product reviews, pricing data, case studies and industry commentary into a single narrative, brands that fail to appear in these AI-generated summaries simply disappear from the decision set.

The tradeoff, however, is structural. As more users receive complete answers from generative systems, fewer click through to websites. Click-through rates decline sharply when AI summaries sit above search results, and forward-looking models indicate that organic traffic could fall by as much as half in the coming years if brands do not optimise for AI visibility.

What strategies do marketers need for AI‑first search?

As AI search tools compress the funnel and shift discovery into conversational interfaces, marketers must adapt their content and data strategies. The following guidelines derive from our analysis of best practices across B2B marketing agencies and enterprise deployments:

- Engineered passages and structured content – Break long articles into semantically complete passages with clear topic sentences, bullet lists and Q&A sections.

  - Each block should answer a specific question so that LLMs can assemble answers without additional context.

  - Use descriptive H2 and H3 headings to signal content hierarchy and help AI models identify relevant passages.

  - Avoid walls of text; instead, use lists and tables to convey information succinctly.

- Topic clustering and entity‑rich language – Build clusters of related content that demonstrate topical authority.

  - Use consistent terminology aligned with industry knowledge graphs to help AI systems recognise entities and relationships.

  - Map out secondary and related queries that AI engines may generate (so‑called “query fan‑out”) and address them within your content through comparative analysis sections, alternative solution coverage and FAQs.

  - This widens the range of queries your passages can match and increases the likelihood that your brand will be cited across different user intents.

- Optimise for AI citation and zero‑click visibility – Traditional SEO metrics need to be expanded. Success increasingly depends on how often your content is cited by AI platforms and featured in AI‑generated answers.

  - Place essential facts and figures early in your copy and use structured data markup where applicable to improve answerability.

  - Authoritative, well‑formatted passages with clear claims and evidence are more likely to be extracted by AI engines.

- Invest in vector databases and knowledge management – Deploy internal vector search to unlock value from your knowledge base. By converting support articles, product manuals, research papers and marketing assets into embeddings, you make them discoverable by both humans and AI.

- Prioritise trustworthy, first‑party data – AI search systems tend to favour sources that signal expertise, authority and trust. Offer unique research, case studies and proprietary statistics so that your brand becomes a primary source.

  - Marketing teams should treat media coverage and first‑party research as generative search channels: the narratives created by journalists and analysts heavily influence how AI models frame your brand.

  - When harmful or inaccurate portrayals occur, address them promptly, because AI engines rely on aggregated sentiment.

- Measure AI visibility and reputation – Monitor how different AI platforms answer key questions about your business.

  - Variance across engines is significant; one model may highlight your strengths while another may ignore you or repeat outdated information.

  - Use audits to track whether your brand appears in ChatGPT, Copilot, Gemini or Perplexity responses and adjust your content strategies accordingly.
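Once answers have been collected from each platform, an AI visibility audit can start with a simple metric: the share of answers, per engine, that mention your brand. The sketch below computes it. It assumes a hypothetical audit log of copied answers and a hypothetical brand name, "Acme CRM". It does not call any platform's API; how you gather the responses is left open.

```python
import re
from collections import defaultdict

def mention_rate(responses: list[tuple[str, str, str]], brand: str) -> dict[str, float]:
    """Share of collected answers per engine that mention the brand (whole words, case-insensitive)."""
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for engine, _question, answer in responses:
        totals[engine] += 1
        if pattern.search(answer):
            hits[engine] += 1
    return {engine: hits[engine] / totals[engine] for engine in totals}

# Hypothetical audit log: (engine, question asked, answer text copied from the engine).
responses = [
    ("ChatGPT", "best CRM for mid-sized manufacturers", "Options include Acme CRM and others."),
    ("ChatGPT", "Acme CRM pricing", "Acme CRM starts at a per-seat monthly fee."),
    ("Perplexity", "best CRM for mid-sized manufacturers", "Popular choices are VendorX and VendorY."),
    ("Perplexity", "Acme CRM pricing", "acme crm lists pricing on its website."),
]
for engine, rate in mention_rate(responses, "Acme CRM").items():
    print(f"{engine}: {rate:.0%}")
```

Re-running the same question set at regular intervals turns these percentages into a trend line. That makes the variance between engines, and the effect of content changes, visible over time.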

SEO is not dead, but it is evolving

Conventional practices such as technical optimisation, keyword‑focused metadata, internal linking and conversion testing still matter because search engines remain a major traffic source. However, generative AI is redefining what it means to be discoverable. AI search not only summarises information from top‑ranking pages but also pulls from across the web to construct answers.

As more users accept AI as their primary discovery tool, marketers must expand beyond “search engine optimisation” toward “answer engine optimisation” and “generative engine optimisation.” These approaches encompass structuring content for passage‑level retrieval and embedding, ensuring that each narrative fragment can be discovered and reassembled by AI.

They also involve continuously updating information so that AI models retrieve the latest data rather than outdated copies. The cost of ignoring AI optimisation is steep, as shown by prominent brands whose organic traffic plummeted following algorithm updates and AI‑driven results. Conversely, brands that adapt early can capture high‑intent traffic from AI citations and build authority in emerging generative channels.

How can proprietary data drive AI‑ready marketing?

One of the most powerful levers available to B2B organisations is their own data. Internal documents, research reports, CRM notes and service tickets contain deep insights into customer needs and product performance. By implementing internal vector search, companies can transform these assets from static archives into dynamic engines that feed AI copilots.

The process involves auditing existing content, selecting a suitable vector database and embedding model, vectorising all documents and building intuitive search interfaces. Monitoring retrieval accuracy and user feedback then drives continuous improvement.
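Here is a compressed sketch of those steps: vectorise a small corpus, index it, and monitor retrieval accuracy with recall@k on labelled test queries. Several assumptions keep it self-contained:

- The `VectorIndex` class is a hypothetical in-memory stand-in for a real vector database.
- Word counts stand in for the embedding model you would actually select.
- The documents and labelled queries are invented for illustration.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Stand-in for the chosen embedding model: lower-cased word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class VectorIndex:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.ids: list[str] = []
        self.vectors: list[Counter] = []

    def add(self, doc_id: str, text: str) -> None:
        self.ids.append(doc_id)
        self.vectors.append(embed(text))

    def search(self, query: str, top_k: int = 3) -> list[str]:
        q = embed(query)
        scored = sorted(zip(self.ids, self.vectors), key=lambda item: cosine(q, item[1]), reverse=True)
        return [doc_id for doc_id, _ in scored[:top_k]]

def recall_at_k(index: VectorIndex, labelled: list[tuple[str, str]], k: int = 1) -> float:
    """Fraction of test queries whose known-correct document appears in the top k results."""
    found = sum(1 for query, expected in labelled if expected in index.search(query, top_k=k))
    return found / len(labelled)

corpus = {
    "manual-setup": "Installation guide: connect the sensor and run the setup wizard.",
    "ticket-4411": "Customer reports the setup wizard freezes on step two.",
    "case-study-logistics": "Logistics firm cut delivery delays using our routing module.",
}
index = VectorIndex()
for doc_id, text in corpus.items():
    index.add(doc_id, text)

# Hypothetical labelled queries; in practice these would come from real, reviewed user searches.
labelled = [
    ("how do I install the sensor", "manual-setup"),
    ("routing module results for logistics", "case-study-logistics"),
    ("wizard freezes", "ticket-4411"),
    ("app hangs during configuration", "ticket-4411"),  # paraphrase with no shared words
]
print(f"recall@1 = {recall_at_k(index, labelled, k=1):.2f}")
```

The last query is missed because word counts cannot link "hangs" to "freezes". This is the kind of gap that monitoring surfaces and that a stronger embedding model may close.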

Real‑world results are compelling. Enterprises have used internal vector search to supply private LLMs with context for drafting proposals and marketing copy, shortening production cycles. This illustrates that vector search is not merely a customer‑facing tool; it is an organisational capability that accelerates knowledge sharing and powers applications such as retrieval‑augmented generation.

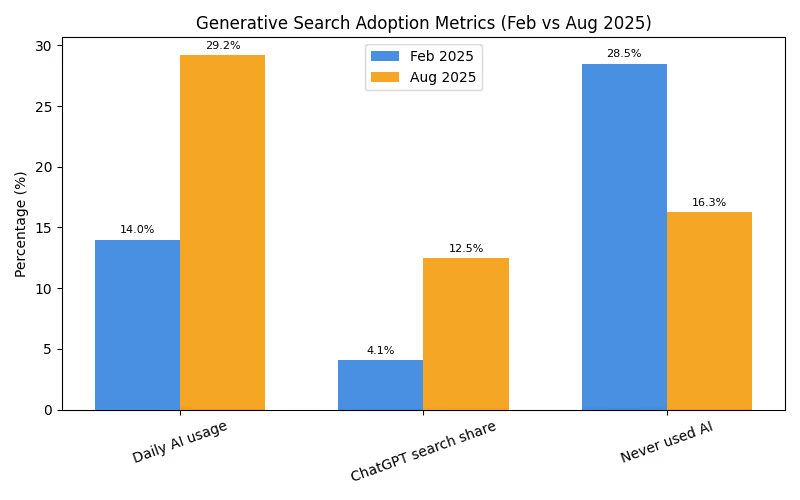

Visualising the pace of adoption

To understand how quickly generative search is reshaping behaviour, consider the following comparison of key adoption metrics between early 2025 and mid‑2025. We observed that daily AI tool usage doubled, the share of searches handled by ChatGPT tripled, and the proportion of people who had never used AI shrank dramatically. The chart below demonstrates this shift.

The steep climb in adoption underscores the urgency for marketers to integrate AI visibility into their core strategies. Waiting for annual SEO reviews is no longer viable given how quickly audience behaviour changes. (source link)

AI‑driven search has moved from novelty to necessity

Semantic and vector search each play vital roles in this shift: semantic search interprets intent and context, while vector search enables similarity matching at scale. Together, they power retrieval‑augmented generation (RAG), allowing LLMs to deliver grounded, conversational answers.

B2B buyers are embracing these tools, compressing research cycles and arriving at decisions with more information than ever before. Brands that adapt by structuring content for passage‑level retrieval, building internal vector databases and investing in first‑party research will capture disproportionate value.

For CMOs, the message is clear: treat AI search as an extension of your marketing infrastructure, not an experiment. Relevance is no longer defined solely by keywords; it is determined by how well your data aligns with user intent and how easily AI models can access and summarise your knowledge.

With the right strategy, the combination of semantic and vector search becomes a powerful advantage, one that improves customer experience, drives efficiency and ensures your brand remains discoverable in a world where answers come before clicks.
