Understanding Fan-Out Queries: How Google's Gemini Breaks Down Complex Prompts

Large language models are changing how people search for information, with big implications for B2B marketing. Instead of simply matching keywords to web pages, AI search engines like Google’s Gemini break each query into a series of smaller questions, run many searches in parallel, and then synthesize the results into a single answer.

This process, known as fan-out queries, rewrites the rules of search. If your content isn’t designed for this new environment, you risk being invisible when prospects turn to AI-powered tools.

In this blog article, we unpack what fan-out queries are, how Gemini actually breaks down complex prompts, and what that means for your B2B marketing strategy.

Whether you’re a CMO or a seasoned marketer, you’ll learn how to align your content with the way AI thinks, so your brand appears in the answers your prospects see.

What are fan-out queries, and why do they matter for B2B marketers?

Fan-out queries occur when an AI system takes your single question and expands it into multiple related sub-queries. Each branch captures a different angle of the original intent, and the AI then pulls information from each branch to create a comprehensive response.

For example, a simple prompt like “best CRM software for small businesses” could fan out into sub-queries such as “affordable CRMs”, “features comparison for small business CRMs”, “integration options for CRM and accounting software”, and “free trial CRMs”. Instead of one keyword per page, the model now interprets the question holistically, covering categories, features, pricing, and more.
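A toy Python sketch makes the branching concrete. It is an illustration, not Gemini's method: Gemini generates sub-queries with a language model, whereas this sketch expands a topic against a fixed, hypothetical set of facet templates modelled on the CRM example above.

```python
from dataclasses import dataclass


@dataclass
class SubQuery:
    facet: str  # the angle of the original intent this branch covers
    text: str   # the narrower search string issued for that angle


# Hypothetical facet templates for illustration only. A system like Gemini
# generates sub-queries with a language model rather than fixed templates.
FACET_TEMPLATES = {
    "pricing": "affordable {topic}",
    "features": "features comparison for {topic}",
    "integrations": "integration options for {topic} and accounting software",
    "trials": "free trial {topic}",
}


def fan_out(topic: str) -> list[SubQuery]:
    """Expand one seed topic into one sub-query per facet template."""
    return [
        SubQuery(facet, template.format(topic=topic))
        for facet, template in FACET_TEMPLATES.items()
    ]


for sub_query in fan_out("small business CRMs"):
    print(f"{sub_query.facet:>12}: {sub_query.text}")
```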

In B2B, buying decisions often involve multiple stakeholders. Finance wants pricing clarity, IT checks integration and security, operations evaluates workflows, and executives focus on ROI.

AI search mirrors this committee-style dynamic, transforming one query into a set of questions that reflect each stakeholder’s concerns. If your content doesn’t answer those deeper, stage-specific questions, AI tools will pull answers from someone else.

How does Google’s Gemini break down complex prompts?

Gemini’s fan-out process begins by understanding the user’s intent. The system classifies the query type (short fact, reasoning question, or comparison) and determines the answer format. For complex prompts, Gemini uses a large language model to generate many synthetic sub-queries, sometimes dozens. Each one explores a different aspect of the original request. It then retrieves live content from the web for each sub-query and passes those results through several processing steps:

- Retrieval-Augmented Generation (RAG): Gemini chunks each document into smaller passages, embeds them as vectors, and performs dense retrieval to find the most relevant snippets.

- Ranking and reranking: Using techniques such as reciprocal rank fusion and neural reranking, Gemini filters sources based on freshness, authority, intent alignment, and diversity.

- Reasoning and synthesis: The model constructs a structured answer by identifying key claims, grounding them in retrieved passages, resolving conflicts, and selecting specialised sub-models where needed.

- Citation and response generation: Gemini attaches citations to specific claims, integrates text with multimodal elements (e.g. images or charts) and tailors the presentation based on the user’s context.
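The retrieval and ranking steps can be illustrated with a deliberately simplified Python sketch. It is not Gemini's implementation: bag-of-words count vectors stand in for learned embeddings, the documents and sub-queries are made up, and k = 60 is the constant commonly used with reciprocal rank fusion rather than a value Google has published.

```python
import math
import re
from collections import Counter, defaultdict


def chunk(text: str, size: int = 40) -> list[str]:
    """Split a document into passages of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy stand-in for a learned embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, passages: list[str], top_k: int = 3) -> list[str]:
    """Return the top_k passages most similar to the query."""
    q = embed(query)
    return sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)[:top_k]


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked lists: each item scores sum(1 / (k + rank)) over the lists it appears in."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, item in enumerate(ranking, start=1):
            scores[item] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


# Made-up documents; each is short enough to become a single passage.
documents = [
    "HubSpot pricing starts with a free tier and paid seats",
    "Salesforce integrates with most ERP systems through connectors",
    "HubSpot offers native integrations with accounting software",
    "User adoption depends on onboarding and training",
]
passages = [p for doc in documents for p in chunk(doc)]
sub_queries = ["HubSpot pricing", "CRM integrations with ERP and accounting software"]
fused = reciprocal_rank_fusion([retrieve(q, passages) for q in sub_queries])
print(fused[0])
```

In this run the integrations passage is only second for the pricing sub-query but first for the integration sub-query, so fusion places it ahead of the pricing passage. A passage that answers several angles reasonably well can beat one that answers a single angle best.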

The scale of this process is significant. Research on Gemini 3 shows it averages 10.7 fan-out queries per prompt, with some prompts spawning up to 28 sub-queries. These fan-outs are long-tail: they average 6.7 words and often include brand names (26.4% of queries), dates (21.3%) and detailed comparisons. Most fan-out queries (95%) have zero search volume in traditional keyword tools, indicating that they are highly specific and tailored to the user’s situation.

Gemini also issues hundreds of searches for deep research tasks and uses internal datasets, such as Google Finance and the Shopping Graph, to incorporate real-time information. In essence, Gemini is not just a chatbot; it’s a sophisticated orchestrator of many micro-searches, merging structured and unstructured data to provide a custom answer.

The shift from traditional search to AI retrieval

Traditional search focused on ranking webpages for a single keyword. Get your page onto the first results page, and you will be rewarded with clicks. AI search changes the game. AI Mode and the upcoming Web Guide retrieve results for the seed query and the AI-generated fan-out queries, then cluster them into thematic groups. Instead of a linear list of “10 blue links”, users see clusters like “pricing breakdown”, “product comparisons”, and “integration options”, each accompanied by a short AI-generated summary.

For B2B marketers, this shift means two things:

- Inclusion matters more than rank. If your content isn’t retrieved and clustered under any relevant sub-query, you’re invisible. Ranking first for a single keyword doesn’t guarantee a mention in the AI answer.

- Context determines visibility. AI systems evaluate not just a page’s overall relevance, but how well its individual passages answer a variety of sub-questions. Content that addresses multiple angles (features, pricing, integration, ROI) has a better chance of being cited.

How does fan-out mirror the B2B buyer’s journey?

Modern B2B purchases are rarely made by a single person. More than 95% of B2B deals involve four or more stakeholders. Each person has a different question: finance asks about the total cost of ownership, IT checks data security and integration, operations looks at workflows and adoption, and sales enablement wants to understand user training. Query fan-out replicates this committee-style search by breaking a single query into a spectrum of angles.

Here are some examples that illustrate how fan-out aligns with buyer-stage concerns:

- SaaS (CRM platforms): An initial search for “best CRM for enterprises” spawns sub-queries like “Salesforce vs HubSpot pricing breakdowns” (finance angle), “HubSpot integrations with ERP” (IT angle), and “user adoption rates for Salesforce vs HubSpot” (sales enablement angle).

- Wholesale or distribution (inventory management): A query about “best inventory software” branches into “Cin7 vs NetSuite inventory workflows” (operations angle), “cost of scaling NetSuite licenses” (finance angle), and “integration with Shopify or Magento” (IT or marketing ops angle).

- Fintech (ERP and compliance): “Top ERP systems for compliance” fans out to “ERP compliance and security features” (IT or compliance angle), “cost of maintaining SOX compliance in ERP systems” (CFO angle), and “integration with Tableau for analytics” (operations or analytics angle).

These are precisely the sub-queries buying committees ask. If your content covers only a high-level overview, AI will pull more detailed answers from other sources. To be visible throughout the buyer’s journey, you need assets that address each stakeholder’s concerns.
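One way to check whether a content plan reaches every committee member is to tag fan-out sub-queries by stakeholder. The Python sketch below reuses the example sub-queries above with a hypothetical keyword map. Keyword matching is a crude stand-in for the intent classification an AI system performs, and a sub-query can map to more than one stakeholder (here the SOX compliance query is tagged for both finance and IT).

```python
import re

# Hypothetical keyword rules; tune them to your own buying committee.
STAKEHOLDER_KEYWORDS = {
    "finance": {"pricing", "cost", "licenses", "roi"},
    "it": {"integration", "integrations", "security", "compliance"},
    "operations": {"workflow", "workflows", "analytics"},
    "sales_enablement": {"adoption", "training"},
}


def tag_stakeholders(sub_query: str) -> set[str]:
    """Return every stakeholder whose keywords appear in the sub-query."""
    words = set(re.findall(r"[a-z0-9]+", sub_query.lower()))
    return {role for role, keywords in STAKEHOLDER_KEYWORDS.items() if words & keywords}


def coverage_by_stakeholder(sub_queries: list[str]) -> dict[str, list[str]]:
    """Group sub-queries by stakeholder; an empty list flags an uncovered angle."""
    coverage: dict[str, list[str]] = {role: [] for role in STAKEHOLDER_KEYWORDS}
    for sub_query in sub_queries:
        for role in tag_stakeholders(sub_query):
            coverage[role].append(sub_query)
    return coverage


examples = [
    "Salesforce vs HubSpot pricing breakdowns",
    "HubSpot integrations with ERP",
    "user adoption rates for Salesforce vs HubSpot",
    "Cin7 vs NetSuite inventory workflows",
    "cost of scaling NetSuite licenses",
    "integration with Shopify or Magento",
    "ERP compliance and security features",
    "cost of maintaining SOX compliance in ERP systems",
    "integration with Tableau for analytics",
]
for role, queries in coverage_by_stakeholder(examples).items():
    print(f"{role}: {len(queries)} sub-queries")
```

Running the same check against the questions your existing pages answer shows which stakeholders your content leaves without an answer.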

Optimizing your content for fan-out queries in B2B marketing

How do you adapt your SEO and content strategy for fan-out queries?

Here are some steps your SEO and content team should consider when drafting content for AI Overviews, AI Mode and other LLM-powered tools:

- Build topic clusters and comprehensive coverage - Identify your core topics and build topic clusters around them. A topic cluster consists of a central pillar page that provides a broad overview and several cluster pages that dive into related subtopics. For instance, a “CRM for enterprises” pillar page could link to cluster articles about pricing comparisons, integration guides, user adoption tips and ROI calculators. This structure signals to AI that your site covers the breadth and depth of a subject.

- Write modular, entity-rich content - AI systems process content in chunks, so each section should answer a specific question and stand on its own. Use clear headings, concise summaries, and bullet points where appropriate. Incorporate entity-rich language: mention relevant brands, products, technical terms, and contextual details. This helps AI understand relationships and improves passage-level retrieval.

- Incorporate schema and structure - Implement FAQ and How-To schema markup to make your content easier to parse. Use comparison tables, lists, and definition blocks for succinct answers. Include statistics, data points and verifiable claims to improve the likelihood of being cited. Update content frequently with visible publish and update dates; Gemini’s fan-out queries often include years, reflecting a preference for fresh content.

- Mine data sources for sub-query ideas - Use tools like People Also Ask, keyword research platforms, and chat prompts to expand your seed queries. Examine internal search logs, chat transcripts and sales or support feedback to capture real language and pain points. Monitor forums like Reddit and professional communities for naturally phrased questions. Overlay these sources to identify high-value subtopics that AI models are likely to generate.

- Design bottom-funnel assets - AI models prioritize buyer-stage content over generic top-of-funnel material. Create comparison pages, case studies, ROI breakdowns, integration guides and detailed product demos. These assets answer the deeper questions decision-makers ask and can make or break your visibility when the AI synthesizes results.

- Strengthen your credibility - Differentiate your content by showcasing proprietary data, benchmarks and real-world results. Instead of repeating what everyone else says, include first-hand interviews, original research or unique process insights. AI systems favour trustworthy, authoritative sources and will prioritize content that brings something new to the table.

- Interlink comprehensively - Use internal links with contextual anchor text to tie related topics together. This not only improves user navigation but also signals the depth of the topic to AI systems. Think of your content as a knowledge graph: the more connections you make, the easier it is for AI to follow the logic across different sub-queries.
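For the schema step, FAQ markup is commonly embedded in a page as JSON-LD. The Python sketch below generates a schema.org FAQPage block from question-and-answer pairs, for example pairs drawn from your sub-query research. The product name ExampleCRM and the answer texts are hypothetical placeholders, and the sketch says nothing about how any particular AI engine weighs this markup.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)


# Hypothetical questions drawn from sub-query research; answers are placeholders.
snippet = faq_jsonld([
    ("How much does ExampleCRM cost per seat?",
     "Placeholder: state current per-seat pricing and the date it was last updated."),
    ("Does ExampleCRM integrate with NetSuite?",
     "Placeholder: describe the connector, what it syncs, and typical setup time."),
])
print('<script type="application/ld+json">')
print(snippet)
print("</script>")
```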

Comparing Buyer Stages and Fan-Out Content

Here is a table outlining the kinds of queries AI engines generate at each buyer stage and the content types that best serve those sub-queries. Use this as a checklist when planning your topic clusters.

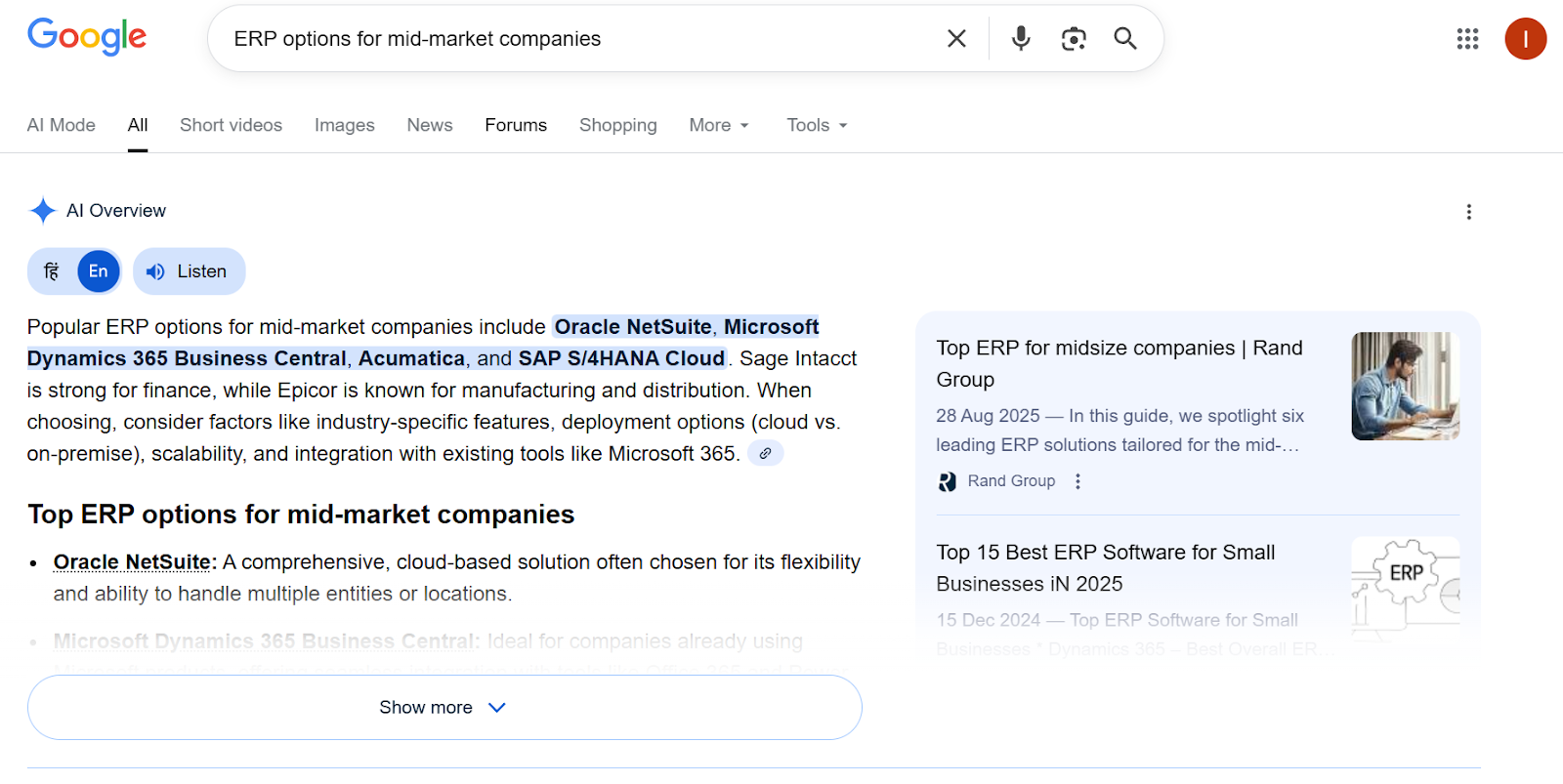

The screenshot above shows how Google’s AI clusters the results for the search query “ERP options for mid-market companies” into a structured overview.

Which metrics and insights can inform your fan-out strategy?

To manage your fan-out optimisation effectively, you need to track metrics that reflect how AI systems operate. Below are insights from recent research that you should monitor:

- Number of fan-out queries per prompt: Gemini 3 averages 10.7 sub-queries per prompt, while earlier versions averaged around 6. A higher number suggests the AI is exploring the user’s intent from more angles.

- Length of sub-queries: Fan-outs average 6.7 words, reflecting long-tail specificity. This is a signal that the AI is probing for fine-grained details.

- Brand inclusion: 26.4% of fan-out queries contain a brand name. Getting your brand mentioned in these queries increases your chances of citation.

- Date inclusion: 21.3% of fan-out queries include a year. Publish and update dates matter. Keep your content fresh.

- Search volume: 95% of fan-out queries have zero search volume. Don’t rely solely on keyword tools; instead, focus on intent and context.

Key fan-out query metrics from analysis of Gemini’s retrieval behaviour (source: Seer Interactive’s Gemini Fan-Out Research Dataset).
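If you collect fan-out sub-queries for your own topics, you can compute comparable metrics on that sample. The Python sketch below assumes a hypothetical input format (each prompt mapped to the sub-queries it produced) and a brand list you supply; it only matches single-word brand names and four-digit years, and its numbers describe your sample, not the research figures above.

```python
import re
from statistics import mean

YEAR = re.compile(r"\b(?:19|20)\d{2}\b")  # four-digit years such as 2025


def fan_out_metrics(fan_outs: dict[str, list[str]], brands: set[str]) -> dict[str, float]:
    """Summarise a sample of prompts and the sub-queries each one fanned out into."""
    queries = [q for subs in fan_outs.values() for q in subs]
    if not queries:
        raise ValueError("need at least one sub-query")
    brand_words = {b.lower() for b in brands}  # single-word brand names only

    def mentions_brand(query: str) -> bool:
        return bool(set(re.findall(r"[a-z0-9]+", query.lower())) & brand_words)

    return {
        "avg_sub_queries_per_prompt": mean(len(subs) for subs in fan_outs.values()),
        "avg_words_per_sub_query": mean(len(q.split()) for q in queries),
        "brand_share": sum(mentions_brand(q) for q in queries) / len(queries),
        "year_share": sum(bool(YEAR.search(q)) for q in queries) / len(queries),
    }


# Hypothetical sample for illustration.
fan_outs = {
    "best CRM for enterprises": [
        "Salesforce vs HubSpot pricing breakdowns 2025",
        "HubSpot integrations with ERP",
        "user adoption rates for enterprise CRMs",
    ],
    "best inventory software": [
        "cost of scaling NetSuite licenses",
    ],
}
print(fan_out_metrics(fan_outs, brands={"Salesforce", "HubSpot", "NetSuite"}))
```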

Build your proprietary knowledge advantage with caselets and examples

Standing out in AI search isn’t just about covering the right topics; it’s about offering unique proof and perspective. B2B buyers want to see real results, not generic advice.

To build your proprietary knowledge advantage, focus on these important factors:

- Original research
  - Conduct surveys, run experiments, or analyse customer data.
  - Publish findings on trends, benchmarks and best practices.
  - Use data that highlights real performance impacts, such as how CRM features affect sales cycle length.

- Case studies with numbers
  - Go beyond generic success stories.
  - Include quantifiable results like “Integration reduced onboarding time by 35%”.
  - Add supporting visuals, charts, or workflow diagrams.

- Expert commentary
  - Interview internal subject matter experts, partners or customers.
  - Use their firsthand insights to build depth and authority.
  - Highlight practitioner language that AI systems recognise as credible.

- Proprietary frameworks
  - Develop your own models or methodologies for common industry challenges.
  - For example, a four-stage framework for CRM implementation.
  - AI engines look for novel, structured approaches and reward them in synthesis.

How do you measure the impact of fan-out on SEO and lead generation?

Because AI search behaviour is still evolving, there is no definitive dashboard for fan-out success. However, you can start with a clear measurement framework:

- Simulate AI sub-queries
  - Prompt AI models directly to uncover which sub-queries they generate for your core topics.
  - Identify coverage gaps that limit AI citations.

- Audit content depth
  - Map your assets across Awareness, Consideration and Decision stages.
  - Identify missing formats such as comparisons, ROI pages, or integration explainers.

- Track AI visibility
  - Monitor how often your brand appears in AI Overviews and conversational results.
  - Review brand mentions, sentiment and share of voice across AI surfaces.

- Measure lead quality
  - Track progression of AI-generated leads through the funnel.
  - Compare conversion and close rates for prospects who interacted with bottom-funnel assets.

- Iterate and refine
  - Update pages regularly to reflect new data and product changes.
  - Experiment with new formats, add depth, and watch shifts in AI citations.
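Simulating sub-queries and tracking AI visibility can start in a spreadsheet, but a short script keeps the calculation repeatable. The Python sketch below assumes you have already recorded, manually or with a monitoring tool, which domains each AI answer cited for every sub-query you tested. The input format, the `.example` domains and the metric definitions (citation rate as the share of sub-queries where you are cited at least once, share of voice as your share of all recorded citations) are illustrative assumptions, not an industry standard.

```python
from collections import Counter


def visibility_report(citations: dict[str, list[str]], your_domain: str) -> dict:
    """Summarise how often `your_domain` is cited across sampled AI answers.

    `citations` maps each sub-query you tested to the domains the AI answer cited.
    """
    if not citations:
        raise ValueError("need at least one sampled sub-query")
    counts = Counter(d for domains in citations.values() for d in domains)
    total = sum(counts.values())
    gaps = [q for q, domains in citations.items() if your_domain not in domains]
    return {
        # share of sub-queries where you were cited at least once
        "citation_rate": 1 - len(gaps) / len(citations),
        # your citations as a share of all citations recorded
        "share_of_voice": counts[your_domain] / total if total else 0.0,
        # sub-queries with no citation for you: candidates for new or deeper content
        "coverage_gaps": gaps,
        # most-cited other domains, ties kept in first-seen order
        "top_competitors": [d for d, _ in counts.most_common() if d != your_domain][:3],
    }


# Hypothetical observations for illustration.
citations = {
    "CRM pricing for mid-market teams": ["yourbrand.example", "competitor-a.example"],
    "CRM integration with NetSuite": ["competitor-a.example", "competitor-b.example"],
    "CRM ROI calculator": ["yourbrand.example"],
    "CRM security certifications": ["competitor-b.example", "competitor-a.example"],
}
report = visibility_report(citations, "yourbrand.example")
print(report["coverage_gaps"])
```

Re-running the report after each content update gives a simple before-and-after view of whether new pages close the gaps.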

Embracing AI search and staying ahead in B2B marketing

The emergence of fan-out queries signals a fundamental change in search that B2B marketers can’t ignore. Gemini and similar AI models break down each question into a network of sub-queries, search across the web, and synthesize results to answer complex buying questions.

This mirrors the B2B buying journey, in which multiple stakeholders have distinct concerns. To remain visible and influential, brands must align their content with how AI engines think, covering core topics comprehensively, offering detailed comparisons, ROI analyses, and proprietary data, and updating content regularly.

By embracing fan-out optimization, you position your brand not just to rank in AI search, but to be cited as a trusted authority.

Start by mapping out the sub-queries AI engines generate, build content assets across buyer stages, and measure your visibility in AI results. The brands that master this new landscape will lead the conversation when prospects search for answers.
