How Should You Structure Information in RAG so Retrieval Never Fails?

AI has practically entered every field and industry, and it continues to impact every repeatable process and improve efficiency. Still, there are certain compliance challenges when it comes to the ethical use of AI, and how can we make it trustworthy? Retrieval-Augmented Generation (RAG) was built to answer that. It can make AI factual, consistent, and context-aware. However, RAG only performs as well as the information it retrieves. If your knowledge is fragmented, even the best retrieval system will stumble.

The real challenge isn’t RAG’s architecture. It’s the way businesses store and structure their data. This guide explains how to design, label, and govern information so your retrieval pipelines never fail and your teams stop chasing broken answers.

What is RAG really solving and why do retrievals still fail: foundations and failure modes?

RAG’s job is to ground a model’s response in verified data before it speaks. Yet most failures come not from the model, but from the foundation beneath it.

Here’s where retrieval breaks down:

- Chunk imbalance: Chunks are either too large or too small, so the right fact never lands in the top results.

- Noisy indexes: When unrelated content types sit together without metadata, rankers lose signal.

- Single-mode retrieval: Using one retrieval method misses edge cases and nuanced intents.

- Redundant context: Long context payloads introduce near-duplicates, which confuse the model.

- Weak versioning: Outdated or duplicated content gets retrieved, producing conflicting answers.

Remember: Retrieval quality equals how precisely a user’s query maps to a well-defined unit of knowledge supported by minimal yet complete context.

How should you shape the source information architecture for RAG?

Think of your knowledge base as a product inventory, not a library. Every piece must be discoverable, traceable, and reusable.

1. Define atomic units

Each unit should answer one intent without external references. For policies, it might be a section plus a clause. For product documentation, it’s a feature, a limitation, plus an example.

2. Make the structure machine-obvious

Preserve headings, lists, tables, and captions as separate fields. Never flatten everything into text; machines rely on structure to retrieve meaning.

3. Attach business metadata

Assign filters humans use intuitively: product line, region, persona, edition, version, compliance class, and date of effect. This metadata ensures context precision.

4. Separate content types

Keep FAQs, release notes, and legal text in separate collections. Retrieval accuracy improves when the system first searches within the proper context.

5. Normalize identifiers

Use standardized product names, feature codes, and clause IDs. These act as anchors for both keyword and semantic retrieval.

6. Record provenance

Each chunk must include its source URI, title, author, timestamp, and version. Governance begins with traceability.

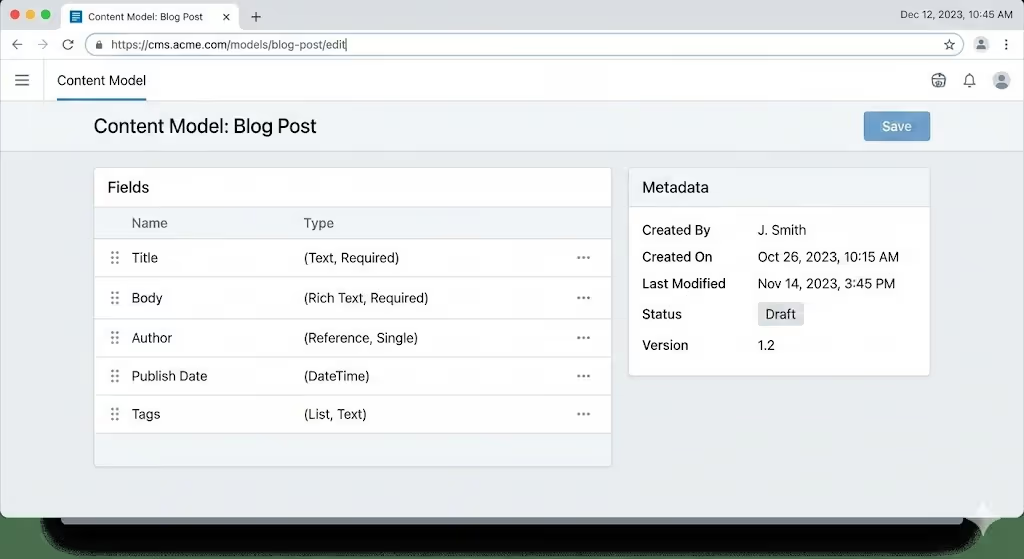

Example Content Model with Fields and Metadata

Chunking best practices for RAG

Chunking defines what your retriever can find. Poor chunking breaks even the best models.

1. Start with structure-aware splits

Split based on logical sections or headings first. Only use token-based splits when no structure exists.

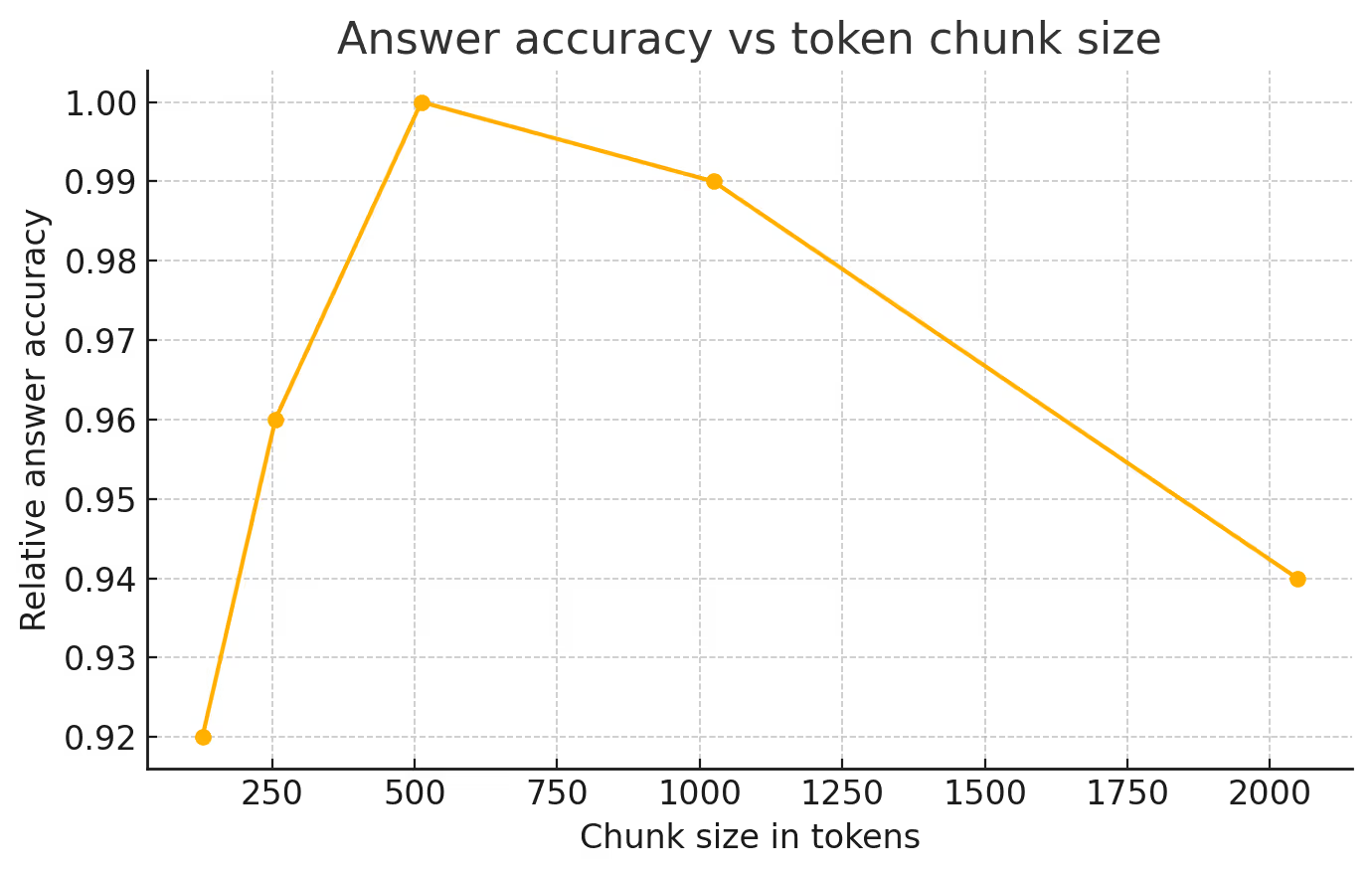

2. Target mid-sized chunks

Most enterprise data performs best between 512–1024 tokens. Small chunks lose context. Large ones bury answers in noise.

3. Use controlled overlaps

A slight overlap helps preserve continuity where information crosses boundaries, but keep it minimal to avoid redundancy.

4. Apply late or semantic chunking for long documents

When documents are highly connected, embed the entire document context before chunking. This prevents the model from pulling disjointed facts.

The chart reflects the common pattern: answer accuracy peaks at mid-sized chunks, consistent with multiple public evaluations, then tapers at the extremes.

How do embeddings and metadata reinforce each other: hybrid indexing and attribute filtering

Dense embeddings capture meaning, while metadata enforces business logic. Together, they build precision.

1. Pre-filter with metadata

Filter by region, product, language, and version before vector search. This narrows the search to only relevant content.

2. Post-filter with policy

After retrieval, apply privacy or entitlement checks before sending results to generation.

3. Follow schema discipline

Keep normalized fields, index numeric and date types separately, and always tag versions clearly.

Insert Screenshot: Vector Index Schema with Typed Metadata and Filters

When should you blend keyword and semantic search: hybrid retrieval that wins more often

RAG succeeds when it can balance literal precision with semantic understanding. No single method does both.

1. Default to hybrid in production

Use keyword search for names, IDs, and numbers. Use vector search for meaning and paraphrase detection.

2. Fuse results smartly

Reciprocal Rank Fusion (RRF) works best when you lack labels. Linear fusion is stronger when you can tune weights from real data.

3. Tune for intent

Exact, compliance-heavy queries should lean on sparse retrieval. Exploratory queries should emphasize semantic weight.

How do you rank without bias while keeping speed: retriever plus reranker stacking

A two-stage retrieval model is reliable and scalable.

Stage 1: Fast retrieval

Fetch top candidates quickly using sparse or dense methods with metadata filters.

Stage 2: Reranking

Run a cross-encoder reranker to score candidates in the query context. This improves ranking quality but adds predictable latency.

Keep these guidelines in mind:

- Keep k small to maintain speed.

- Cache reranker scores for frequently used content.

- Default to heuristic scoring during traffic spikes.

What context window should you target for different tasks?

Every token costs. Only include what strengthens the answer.

- Deduplicate aggressively. Remove repeated headers, footers, or boilerplate text.

- Prioritize citations. Use minimal context around the exact answer span.

- Order by confidence. Put high-relevance spans first; models weigh them more heavily.

- Control for drift. Always prefer the latest version and indicate if a policy has changed.

How do you keep retrieval fresh at scale: governance, versioning, and drift control

RAG maturity isn’t about model choice; it’s about operational hygiene.

- Version everything. From raw docs to embeddings, maintain lineage.

- Automate re-embedding. Refresh vectors when tokenisers or models evolve.

- Monitor retrieval health. Track recall, nDCG, and precision regularly.

- Secure by design. Apply metadata-based permission filters and log every retrieval event.

Which metrics should a CMO watch to prove ROI: KPIs and a simple control plan

CMOs don’t need the pipeline diagram; they need measurable impact.

Core Retrieval KPIs

- Recall@K for top intents

- nDCG@K for main corpus

- First-answer correctness

- Time to first token and overall latency

Operational KPIs

- Corpus coverage across products and markets

- Content freshness lead time

- Percentage of answers referencing current versions

- Escalation deflection rate and time saved

Control Plan

Assign each KPI an owner and review weekly. Use a short quality board of 10 core queries per corpus to track drift. Ship changes only with proven performance deltas.

Which retrieval stack belongs in your environment: one table to decide?

The table shows that hybrid retrieval is the most reliable baseline, while reranking adds precision where latency is acceptable.

Implementation checklist that rarely fails

Here is a checklist your team can use to make retrieval precise, consistent, and ready for production at scale. Follow these steps, and you’ll never have to patch a broken RAG pipeline again -

- Define atomic units and attach business metadata.

- Use structure-aware chunking with overlaps only when necessary.

- Index with both vectors and keywords, then fuse rankings.

- Add a reranker for high-value tasks and cache frequent hits.

- Pack context by evidence order and deduplicate.

- Version everything and monitor retrieval metrics regularly.

- Re-embed on every model or schema change with rollback ready.

Building Retrieval That Scales With Confidence

RAG success doesn’t come from bigger models. It comes from a better structure. When your data is clean, contextual, and version-controlled, every retrieval strengthens the business instead of adding noise. The goal isn’t just accurate answers. It’s consistent intelligence that lets leaders act faster, and teams work with clarity.

What is AI Search Fusion: How Is It Changing SEO Forever?