A Complete Guide to Chunk Optimisation and Index Planning in B2B Marketing

Artificial intelligence has changed how marketing teams discover and leverage information. Instead of only relying on search engines, modern systems blend large language models with relevant content from your own knowledge base. This is often called retrieval‑augmented generation, or RAG.

For marketing leaders, RAG promises that you can feed an AI assistant with your brand guidelines, product data and campaign history and expect well‑crafted answers in your voice.

However, the quality of the answers hinges on two disciplines that many marketers are only now learning about: chunk optimisation and index planning. These processes determine how your source material is broken down into manageable pieces, how those pieces are stored, and how they are retrieved when the model responds to a question. If you ignore them, you risk turning your AI assistant into a scatter‑brained intern. If you get them right, you gain a trusted teammate who produces accurate, context‑rich marketing insights.

This complete guide answers real questions marketers ask about chunk optimisation, shows how to optimise content chunks for AI, explains how to plan your index, and provides a roadmap to build an AI‑ready knowledge base. The goal is to empower you to work smarter and deliver content that resonates with senior decision-makers.

What does chunking mean?

Chunking is the process of dividing a piece of text into smaller, meaningful units. In the context of AI‑driven marketing, chunks are created so that large language models (LLMs) and vector databases can handle your data efficiently.

Modern models have a limited context window. They cannot process entire reports or white papers at once, so we need to feed them snippets that preserve the essence of each topic. When you ask a question, the system retrieves and embeds the most relevant chunks into the prompt before generating an answer.

Chunking serves two purposes:

- Meeting technical limits - Embedding models and chat models only accept a certain number of tokens (a token is roughly four characters of text). For example, the text‑embedding‑3‑small model accepts around eight thousand tokens. Splitting documents ensures that each chunk falls under this limit and prevents truncation.

- Improving retrieval - In a vector search, each chunk becomes a point in semantic space. If the chunk contains multiple topics, the embedding becomes an average representation and may fail to match a specific query. Smaller, focused chunks have clearer embeddings and yield more accurate matches.

In the past, search engine optimisation (SEO) encouraged long pages built around high‑volume keywords. Those pages could rank in search results, and search engines used their own algorithms to highlight passages. AI retrieval flips this model.

You must break information into precise, coherent units so that your AI assistant can find the right context without mixing topics. Chunking is not optional; it is the foundation of any AI‑ready knowledge base.

Why traditional SEO does not prepare content for AI retrieval?

Many marketing teams assume that optimising a web page for search engines automatically prepares it for AI search. This assumption fails because search engines and AI retrieval work differently.

Traditional SEO focuses on ranking an entire page. It uses signals such as backlinks, keywords, page structure, and user engagement to determine whether a page appears on the first page of search results. AI retrieval, by contrast, operates at the chunk level. It does not look at your entire blog article; it looks at the individual passages you have prepared.

Search engine algorithms are also resilient to long pages with multiple topics because they highlight the relevant section in the search snippet. Large language models do not. If your content is not properly broken down, the model may embed unrelated sentences together, making it harder for the vector search to match the query.

The result is a generic answer or, worse, an invented fact. This explains why many early attempts at integrating AI chat into websites produced mediocre responses.

Another misconception is that repeating keywords in multiple places helps AI find the content. In vector search, dense keyword repeats create noise because the embedding captures the average of the entire passage.

Instead, the key to AI search is semantic clarity. Each chunk must be about one idea and contain enough context to stand on its own. Removing filler phrases and aligning with user intent matters more than keyword density.

How to optimise content chunks for accurate AI retrieval?

Optimising chunks is both an art and a science. Here are the core principles and strategies:

- Start with a clear document structure

Audit your existing materials and organise them logically. Marketing content often lives in many formats: product sheets, case studies, email campaigns, customer feedback and market research.

Before chunking, gather these assets into a single repository and group them by product line, audience segment and campaign. This step ensures that your AI system can find the right category when retrieving information, emphasising that a well‑organised knowledge base is the foundation of any RAG system.

- Choose the right chunking method

There is no single method for dividing content. The best approach depends on the nature of your documents and the types of questions you expect.

Here are some methods of chunking content:

When in doubt, start with a paragraph or recursive chunking. Paragraphs often capture a single idea, and recursive splitting can break larger paragraphs down using sentence boundaries.

For marketing documents with headings and subheadings (e.g., case studies or long blog posts), document‑based chunking may be appropriate. This method uses inherent structures, such as headings, code blocks, or Markdown markers, to create logical chunks.

- Select an appropriate chunk size and overlap.

The size of each chunk matters. Small chunks (128-256 tokens) provide high retrieval precision but may lack context; large chunks (2,048 tokens) capture more context but dilute the embedding. A series of experiments across multiple datasets found that medium‑sized chunks around 512-1,024 tokens often achieve the best balance. In particular, page‑level chunking, where each page of a document becomes a chunk, achieved the highest average accuracy of roughly 0.65 across diverse datasets.

Overlap helps maintain continuity between chunks. An overlap of 10-25% is common; we observed that starting with 512 tokens and 25 % overlap (about 128 tokens), while Weaviate recommends 10-20 %. A moderate overlap ensures that information near the boundaries is retained without excessive duplication. Test different sizes and overlaps on your own data.

- Preserve semantic coherence

Regardless of method and size, each chunk should make sense when read alone. If a chunk contains multiple unrelated ideas, split it further. If it is too short to provide context, merge it with the next sentence or paragraph.

Always include any headings or labels that help to identify the context. For example, when chunking a case study, include the section heading “Results” with the corresponding sentences. This ensures that the model sees the heading and knows that the chunk relates to results.

- Add metadata for better retrieval

Metadata tags guide the retrieval system to the right context. Label each chunk with relevant attributes such as document type, audience segment, campaign name, product name, publication date, and user persona. Research recommends tagging chunks with attributes such as content type, target audience and campaign association.

During query time, metadata filters enable the system to retrieve only chunks from the relevant product line or region. For example, if a CMO asks about a specific product launch, the retrieval step can filter out chunks from other products, improving precision.

- Use a hybrid retrieval strategy

Vector search is powerful but not perfect. Combining semantic similarity with keyword or metadata filtering often yields better results. For instance, if a query contains a specific product name, a keyword filter ensures that only chunks containing that product name are considered. A hybrid strategy also helps when synonyms or abbreviations vary across documents. You can also implement re‑ranking models to refine results.

- Continuously evaluate and refine

Chunk optimisation is an ongoing process. After deploying your system, collect metrics on factual accuracy, relevance, and user satisfaction. If the AI often fails to find information, adjust chunk sizes, overlap or metadata.

If chunks are too small to provide context, increase their length. If they are too large and include irrelevant details, split them further. Feedback loops and periodic updates keep your knowledge base fresh and ensure that the AI grows with your business.

- Index planning for B2B marketing knowledge bases

Once your content is chunked effectively, you must plan how to organise those chunks in your vector database and search index.

Index planning is the deliberate design of data structures, fields and filters that enable fast and accurate retrieval.

In B2B marketing, index planning directly affects your ability to answer questions about campaigns, customer segments and market trends, here’s how to do it effectively -

Define your retrieval goals

Start by identifying the questions you expect the AI to answer. Do you need to compare marketing campaigns across regions? Analyse sentiment from customer feedback? Summarise product launch performance? The questions define what metadata fields you need. For example, if regional comparisons are important, include a “region” field in the index. If campaign names matter, include a “campaign” field. Clear goals prevent unnecessary complexity and keep your index lean.

Use hierarchical indexes

Not all queries require the same granularity. For broad overviews or summarisation tasks, retrieving an entire document or section may be more efficient than retrieving small chunks. LlamaIndex documentation suggests decoupling chunks used for retrieval from those used for synthesis.

You can achieve this by building a hierarchical index with multiple layers:

- Document summaries – At the highest level, store summaries of entire documents or sections. These help the system quickly identify relevant documents.

- Paragraph or section chunks – In the next layer, store mid‑sized chunks with headings and paragraphs.

- Sentence or semantic chunks – At the finest level, store short, focused chunks for precise retrieval.

When a user asks a question, the system first retrieves relevant documents via the summaries, then drills down to paragraphs or sentences as needed. This approach reduces the search space and improves retrieval speed.

Apply metadata filters and routing

Use metadata filters to narrow down candidates before semantic retrieval. For example, if a query is about a specific product, filter the index to only include chunks tagged with that product. If a question is about a particular audience (e.g., finance executives), filter by target persona. Combining metadata filters with vector search yields more targeted results.

Routing refers to directing a query to the appropriate index or retrieval method based on its intent. Queries that ask for facts benefit from high‑precision sentence‑level retrieval, while summarisation queries work better with larger paragraphs or document‑level chunks. Dynamic routing ensures that the AI uses the most appropriate retrieval strategy for each task.

Plan for scalability

As your knowledge base grows, retrieval performance can degrade if the index is not optimised. Structured retrieval techniques, such as storing document hierarchies and using metadata tags, help the system scale. When indexing large sets of documents, consider pre‑chunking heavy documents and caching frequently accessed results. Use consistent field names and avoid unstructured categories that may confuse the retrieval engine.

This graph summarises a simplified representation of how chunk size affects retrieval accuracy. It demonstrates that moderate chunk sizes strike the best balance between context and focus.

What does a well‑optimised chunk look like?

A well‑optimised chunk is clear, focused and self‑contained. It stays within the recommended token range, preserves the author’s train of thought and includes any headings or metadata needed for context. Consider the following example of a product launch announcement split into chunks:

- Poorly chunked example - The first chunk introduces a new software product, followed immediately by unrelated pricing details and a user testimonial. The second chunk continues with features and technical specifications. Both chunks mix multiple ideas. When a customer asks about pricing, the retrieval system might find the first chunk but also retrieve unrelated information about the product’s purpose. The answer becomes diluted.

- Well‑optimised example - Chunk A introduces the product and explains its purpose and target audience. Chunk B lists the key features in a bullet format. Chunk C provides pricing details and licensing options. Chunk D includes a user testimonial. Each chunk is labelled with metadata such as “product name”, “features”, “pricing” and “testimonial”. When a pricing question arrives, the system retrieves Chunk C. When someone asks for user feedback, the model retrieves Chunk D. The retrieval is precise, and the model has enough context to answer accurately.

- Sample content piece - Imagine a company announcing its new marketing automation platform. The introduction describes the platform’s goal of unifying campaign management, analytics and customer engagement. A separate heading lists three main features: an intuitive workflow builder, AI‑powered segmenting and real‑time reporting. Another section covers pricing tiers, including a free tier for small businesses and enterprise options for large organisations. Finally, a brief testimonial from a beta customer underscores the platform’s impact on lead generation. Splitting each of these sections into its own chunk ensures that the retrieval system can deliver targeted answers without mixing information.

- Using keyword tools to guide chunking - Selecting the right topics and subtopics is essential when planning your content. Using a keywords explorer, you can enter the core topic and see related questions and long‑tail queries. Each query that has a significant search volume deserves its own section in your blog or report. While we cannot display the actual tool here, please insert a screenshot of the relevant keyword data where indicated below.

When you look at the Ahrefs keyword “chunk optimization”, you see clear clusters emerging. Some of the terms match like: chunking, chunking meaning, chunking strategies for rag. You need to match the intent of the keyword to the terms match.

You can also have a look at the commonly ask questions like:

- What is chunking?

- What is pacing and chunking?

- What is chunking in NLP?

Once you know the key questions, structure your document accordingly. Each subtopic should be chunked into its own unit with a clear heading. This approach not only improves AI retrieval but also makes your content more accessible to human readers.

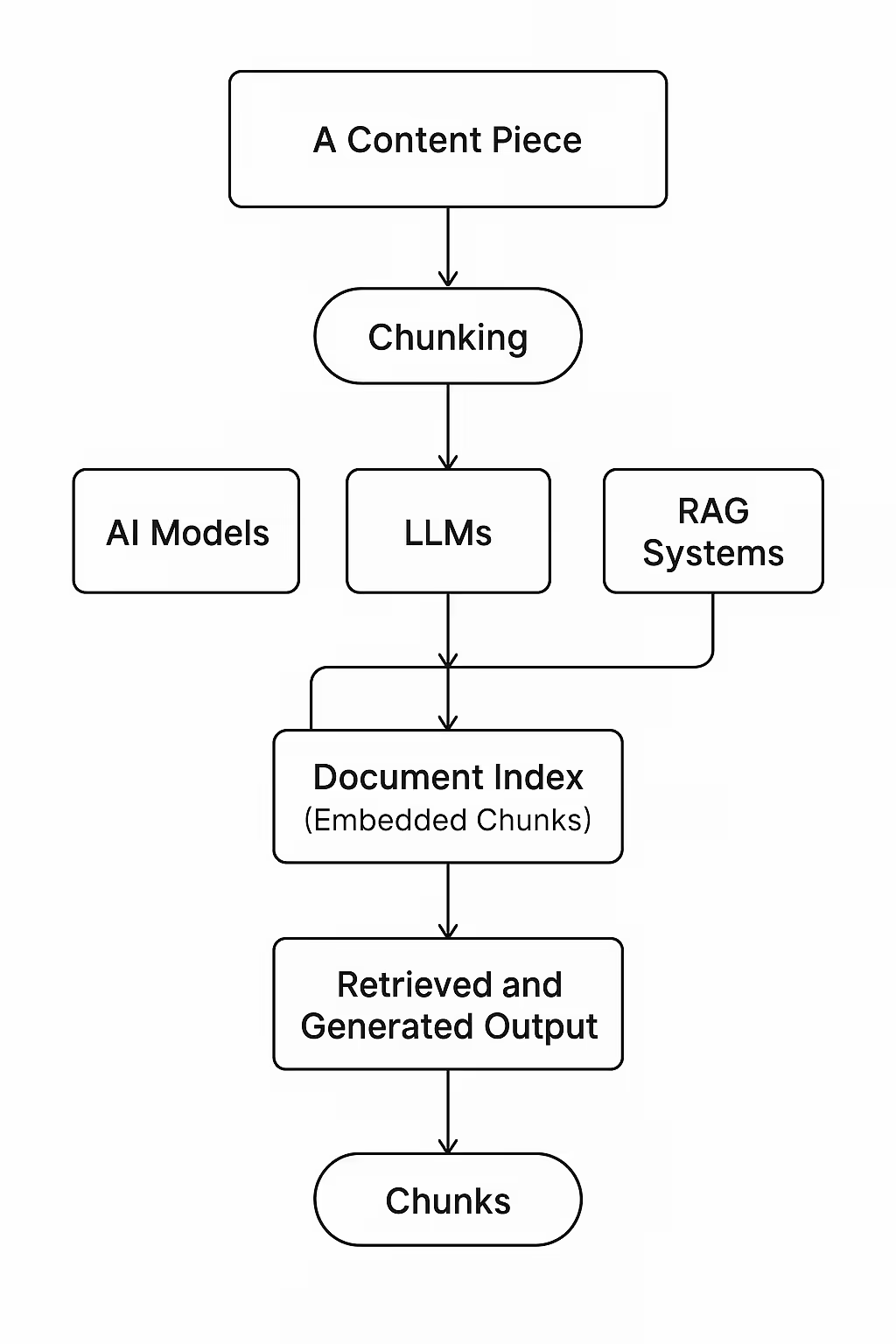

Have a look at entire journey of a content piece, from raw text to chunked, indexed, and retrievable units inside AI and RAG workflows.

From chunking to indexing: your roadmap to AI‑ready content

This blog article discussed in detail about the importance of chunk optimisation, the limitations of traditional SEO, the various chunking strategies and the critical aspects of index planning. To summarise, building an AI‑ready knowledge base requires:

- Auditing your data sources and organising them by relevance and audience.

- Choosing appropriate chunking methods and sizes based on content type and query needs.

- Ensuring each chunk is semantically coherent and self‑contained.

- Adding metadata tags that reflect product names, regions, personas and campaign associations.

- Designing a hierarchical index with summaries, paragraphs and sentences to support different query types.

- Using hybrid retrieval strategies combining semantic, keyword and metadata filters.

- Continuously evaluating retrieval accuracy and refining chunk sizes, overlaps and filters.

By investing in chunk optimisation and index planning, you transform your AI from a generic chatbot into an insightful assistant that understands your brand, speaks in your voice and answers with authority.

This approach empowers marketing leaders to make data‑driven decisions, accelerate content creation and deliver personalised experiences at scale.

What is AI Search Fusion: How Is It Changing SEO Forever?

.avif)