Why Does AI Give Different Answers to the Same Question?

TL;DR

- AI does not return one fixed answer like Google. It builds responses in real time using context, source extraction, and probability.

- Conversation history changes the output. What a user discussed earlier shapes how the same question is understood next.

- Identical wording is rarely processed identically. Small shifts in phrasing or structure can change the model’s reasoning path.

- Web-enabled AI does not run one search. It launches multiple internal sub-searches, pulls different pages, and then synthesizes an answer.

- Brand visibility now depends on clarity, not just traditional rankings. If a brand is described inconsistently across sources, AI tends to leave it out to reduce uncertainty.

Does AI give the same answers to everyone?

Many marketing leaders have seen this scenario play out: you ask an AI tool a question, get an answer, share it with a colleague, and they ask the same thing, only to receive a noticeably different response.

Different brands mentioned, different framing, sometimes even different conclusions. It feels wrong because search engines trained us to expect consistency.

AI does not return one fixed answer. Modern LLMs generate responses in real time using context, probabilities, and the sources available at that moment.

The output depends on who is asking, what came before the question, when it was asked, and how the system interprets intent in that moment.

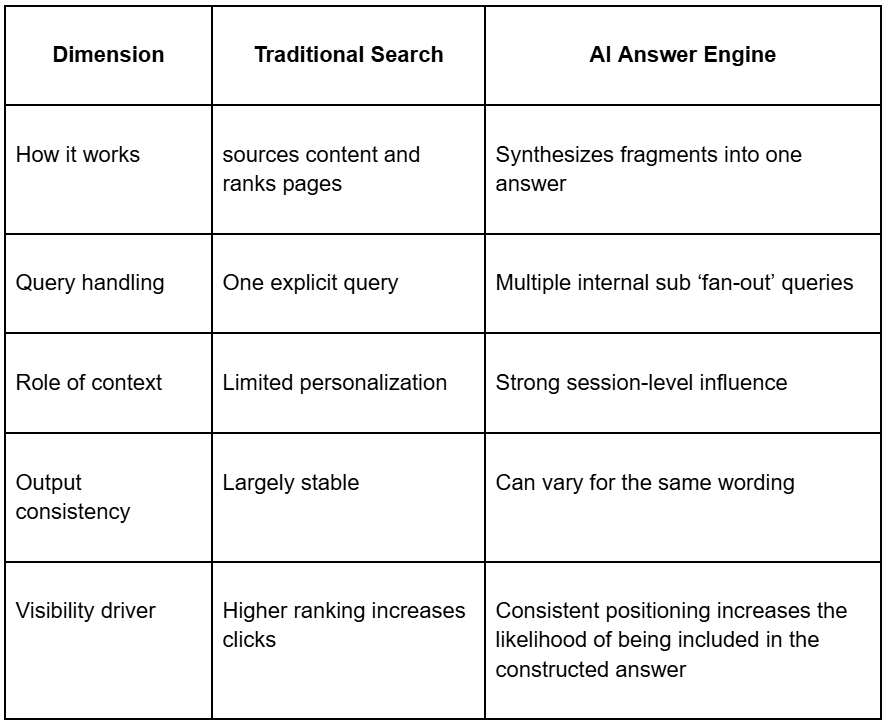

Once you see this clearly, you stop thinking of AI as a search engine and start thinking of it as an answer engine.

This is exactly why LLM visibility work has shifted toward a contextual and intent-led approach.

Here is a table to help you understand how a traditional search engine and an AI answer engine handle and process a query:

How does user context quietly shape what AI decides to say?

Even when an AI tool does not store long-term memory, it still responds to session context.

Recent prompts shape how the next question is interpreted.

If your recent questions were about finance, risk, or compliance, the system interprets your next question through that lens.

If someone else spent the last 20 minutes asking about design, analytics, or automation, the same wording would trigger a different internal response.

This is not personalization in the classic CRM sense. It is contextual consistency. The model tries to stay coherent within the flow of the conversation.

Search engines only lightly adjust results using location or history. AI systems adapt more aggressively to conversational context.

So when the output changes, it is because the system interprets the same prompt through the context and intent that surround it.
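To make session context concrete, here is a minimal Python sketch. It assumes, for simplicity, that a chat tool sends the model the earlier user turns plus the new question as one block of text. Real chat products use structured message lists and also include the AI's own replies, and `build_model_input` is a hypothetical helper, not any vendor's API. What it shows is that the final question can be word-for-word identical while the input the model actually works from is not.

```python
# Simplified sketch: assumes the model sees prior user turns plus the new
# question as one text block. build_model_input is a hypothetical helper.

def build_model_input(history: list[str], question: str) -> str:
    """Join earlier user turns and the new question into one prompt string."""
    turns = history + [question]
    return "\n".join(f"User: {turn}" for turn in turns)

question = "What tools should we evaluate this quarter?"

finance_session = ["How do we reduce compliance risk?", "What are typical audit costs?"]
design_session = ["Which analytics dashboards are easiest to use?"]

finance_input = build_model_input(finance_session, question)
design_input = build_model_input(design_session, question)

# Same final question, different history, so the model receives different input.
print(finance_input == design_input)  # False

# Even a one-character change in wording produces a different input.
print(build_model_input([], "Best CRM for startups?") == build_model_input([], "Best CRM for startups."))  # False
```

The second comparison ties into the punctuation point below: swapping a question mark for a full stop is enough to change what the model receives.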

Are identical questions ever truly identical to an AI system?

From a human perspective, identical wording feels definitive. To a model, it rarely is.

A prompt is never standalone. The model interprets it inside the full conversational flow, which is why even identical wording can produce different outputs.

Even minor details influence this. Punctuation, phrasing, or sentence structure can shift how the model reasons about the question.

A question mark versus a full stop, a missing comma, or a slightly different word order can alter the reasoning chain. When you type into an AI interface, you are not sending a standalone query. You are extending an ongoing conversation.

This is why people ask, "Does ChatGPT give different answers every time?" and feel uneasy when the answer is yes.

What happens behind the scenes when AI searches the web?

The biggest misunderstanding marketers have is assuming that AI pulls the same sources for everyone. In reality, when AI tools operate in web-enabled mode, they do not run one query. They generate multiple internal subqueries, often called fan-out queries.

These subqueries are shaped by phrasing, context, timing, and even which model version is active.

The system then sends those subqueries to search engines through APIs. Different queries retrieve different pages.

The model reads sections from those pages and assembles a single answer from fragments rather than copying any one source.

If the fan-out queries differ between users, the retrieved pages differ. If the retrieved pages differ, the final answer diverges.

This is why "Does AI give the same answers to everyone?" has no simple yes-or-no answer. It depends on what the system retrieves and how it synthesizes those sources.
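The fan-out chain described above can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: the tiny in-memory index replaces a real search API, the `.example` domains are placeholders, and the rule-based `fan_out` function replaces the model-generated subqueries real systems produce. What it illustrates is the dependency chain in the text: different context leads to different subqueries, which lead to different retrieved pages.

```python
# Toy fan-out retrieval. TOY_INDEX, fan_out and retrieve are hypothetical
# stand-ins for a search API and a model-generated query plan.

TOY_INDEX = {
    "best crm": ["reviews.example/top-crms", "vendor-a.example/why-us"],
    "crm compliance": ["vendor-b.example/security", "regulator.example/guidance"],
    "crm ease of use": ["vendor-c.example/tour", "blog.example/crm-ux"],
}

def fan_out(question: str, context_topic: str) -> list[str]:
    """Hypothetical planner: one broad subquery plus one shaped by session context."""
    broad = question.lower().rstrip("?.")
    return [broad, f"crm {context_topic}"]

def retrieve(subqueries: list[str]) -> list[str]:
    """Run each subquery against the toy index and merge results without duplicates."""
    pages: list[str] = []
    for query in subqueries:
        for page in TOY_INDEX.get(query, []):
            if page not in pages:
                pages.append(page)
    return pages

question = "Best CRM?"
finance_pages = retrieve(fan_out(question, "compliance"))
design_pages = retrieve(fan_out(question, "ease of use"))

print(finance_pages)
# → ['reviews.example/top-crms', 'vendor-a.example/why-us', 'vendor-b.example/security', 'regulator.example/guidance']
print(design_pages)
# → ['reviews.example/top-crms', 'vendor-a.example/why-us', 'vendor-c.example/tour', 'blog.example/crm-ux']
```

Both users share the two pages returned for the broad query, but the context-shaped subquery pulls in a different half of the source set, so any answer assembled from these pages starts from different material.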

Why do some brands disappear from AI answers entirely?

There is another layer most teams miss: source confidence. AI systems avoid uncertainty. When information about a brand is inconsistent, contradictory, or poorly defined across sources, the model often removes the brand from the final answer.

This is why brands can vanish from AI-generated answers even when they rank well on Google. The issue is not relevance. It is clarity. If a brand is described differently across websites, roles are unclear, or positioning shifts from page to page, the model loses confidence and excludes it.

This breaks the classic SEO mindset. Visibility is no longer only about ranking. In SEO for LLMs and AI search, it is about being confidently understood.

How does AI construct answers instead of ranking results?

Search engines return ordered lists. AI tools operate differently. They evaluate which sources align with the question, which explanations align with one another, which concepts fit the context, and which brands send consistent signals.

The answer is determined by probability, not position. Two users asking the same question are not competing for rankings. They are activating different probability calculations. That is why the output changes.

This is the fundamental shift. AI is not selecting a winner from a list. It is building an answer from fragments that look consistent, safe, and relevant in that moment.
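The idea that answers are shaped by probability rather than position can be illustrated with a simple sampling sketch. It assumes a model has assigned scores to three hypothetical brand names at one point in an answer (the numbers are invented for illustration), converts them to probabilities with a temperature-scaled softmax, and then draws from that distribution. Real LLMs sample token by token over a vocabulary of many thousands of tokens and products may use other decoding settings, so treat this as a simplified picture of the mechanism, not how any specific tool works.

```python
import math
import random

def softmax(scores: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical candidates and scores at one point in an answer (illustrative only).
candidates = ["Brand A", "Brand B", "Brand C"]
scores = [2.0, 1.6, 0.5]
probs = softmax(scores, temperature=0.8)
print([round(p, 2) for p in probs])  # → [0.57, 0.34, 0.09]

def sample_brand(rng: random.Random) -> str:
    """Draw one candidate in proportion to its probability."""
    return rng.choices(candidates, weights=probs, k=1)[0]

# Two users: same prompt, same probabilities, independent random draws.
user_1 = random.Random(1)
user_2 = random.Random(2)
print([sample_brand(user_1) for _ in range(5)])
print([sample_brand(user_2) for _ in range(5)])
```

Even when two users face exactly the same probabilities, their draws can differ. Lowering the temperature concentrates probability on Brand A, which is why many LLM APIs expose a `temperature` setting to trade variety for repeatability.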

What does this mean for brand visibility in an AI-driven world?

If answers are constructed dynamically, pulled from different sources, filtered by confidence, and shaped by context, then ranking alone is no longer enough.

Brands need to be understandable everywhere the model looks.

This is where search engineering becomes critical. Traditional SEO helps you appear in search engine results.

Search engineering ensures that wherever answers are being built, your brand is clear, consistent, and credible.

Consistency of explanations, depth of expertise, alignment across pages, and clarity of positioning become practical LLM visibility levers. The goal is not just to be found. It is to be safe for the model to include.

The shift from ranking to reasoning is already here

Two people can ask the same question and still get different answers. This happens because the system is not pulling a fixed result from a single index.

It works by drawing on the conversation context, generating fan-out queries, pulling from different sources, and adjusting confidence based on what it finds.

AI answers vary because they are constructed rather than retrieved. Visibility now depends on being consistently understood across the ecosystem.

How Do Large Language Models Rank and Reference Brands?