Are Open Source LLMs Right for Your Enterprise When You Weigh Costs, Risks, and Capabilities?

Why is everyone talking about open source LLMs?

The generative AI wave has moved from lab curiosity to boardroom agenda. Executives are no longer asking if they should deploy large‑language‑model (LLM) capabilities; the real question is how to do it in a way that aligns with budgets, safeguards the brand and delivers real value. Surveys reveal that more than three-quarters of companies using LLMs now prefer open models.

Many teams are also realizing that model choice impacts visibility, because Large Language Model SEO (LLM SEO) is quickly becoming part of the same enterprise decision as cost, risk, and capability.

A desire for cost control, data sovereignty and flexibility has driven this shift. Yet open models are not a silver bullet. Running a large model on your own infrastructure comes with its own risks and hidden costs.

This blog article explores whether open-source LLMs make sense for your enterprise when you consider the full equation: costs, risks, and capabilities.

It distils insights from recent market data and early adopters' experiences to give CMOs and digital transformation leaders a balanced perspective.

Where is the actual cost in open source LLMs?

When evaluating a new technology, leaders naturally look at the price. Open source LLMs are often described as “free” because there are no license fees, but that can be misleading. Running even a medium‑sized model requires specialised hardware, networking and ongoing maintenance. Consider the differences:

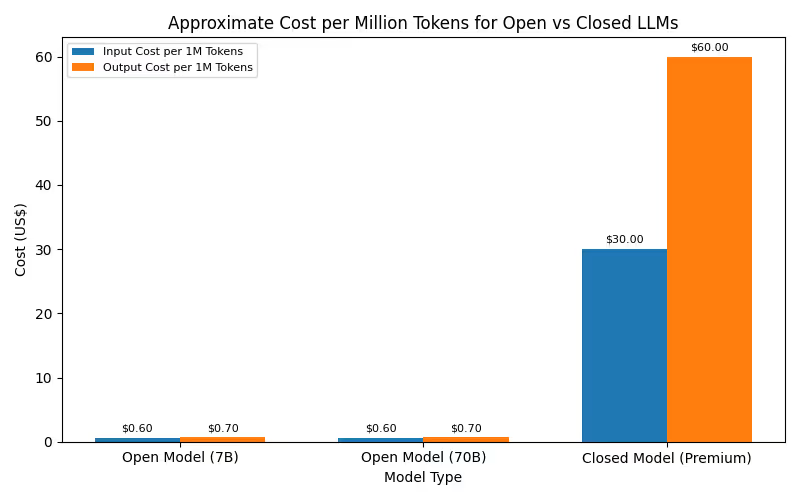

- Licence fees vs. infrastructure spend: Proprietary models charge per‑token usage. Recent benchmarks show that a premium closed model can cost around $30 per million input tokens and $60 per million output tokens. In contrast, open models may cost less than a dollar per million tokens.

- On the surface, this looks like a clear win for open source, but the hardware costs needed to serve those tokens at scale can quickly add up. Organisations without existing GPU clusters must invest in servers or cloud capacity to run 7‑billion- to 70‑billion‑parameter networks efficiently. For instance, a 13‑billion‑parameter model can require 32 GB of GPU memory.

- On the surface, this looks like a clear win for open source, but the hardware costs needed to serve those tokens at scale can quickly add up. Organisations without existing GPU clusters must invest in servers or cloud capacity to run 7‑billion- to 70‑billion‑parameter networks efficiently. For instance, a 13‑billion‑parameter model can require 32 GB of GPU memory.

- Operational expenses and talent: Building and maintaining LLM infrastructure demands DevOps, MLOps and security expertise. Fine‑tuning, monitoring for drift, and implementing robust logging are not trivial tasks. If your team lacks these skills, you may face delays or the need to hire specialists. Proprietary providers wrap all of this into their subscription prices, offering managed infrastructure and service-level agreements (SLAs).

- Hidden costs and scaling considerations: Open models eliminate licence fees, but scaling usage can still be expensive. Running a model 24/7 incurs energy and cooling costs. Upgrading GPUs when a new, larger model is released adds capital expense.

- Meanwhile, closed‑source providers operate on a usage-based model: while the price per token is higher, spend scales with demand and does not require the enterprise to buy hardware upfront.

- Many brands find that hybrid strategies, using open models for controlled internal workloads and closed models for public interactions, provide the best cost‑to‑value ratio.

- For marketing leaders, the cost model is not only about inference spend. It also shapes how quickly you can operationalize SEO for LLMs and AI search across content workflows, product knowledge, and brand safe answers.

The chart below visualises the relative per‑million‑token cost of open versus closed models. Even when open models are cheaper per token, the difference in infrastructure investment often narrows the gap.

What are the real security risks of open source LLMs?

Cost is only one side of the equation; there is another risk side too. Generative models are probabilistic systems, and the very openness of open source models introduces novel attack surfaces. Enterprises using LLMs report exposure to a handful of unique risk categories:

- Data leakage and confidentiality: Open models run on infrastructure you control, yet they often require access to vast amounts of internal data during fine‑tuning. Without rigorous masking and sanitisation, sensitive information may be memorised and inadvertently reproduced. Articles on enterprise LLM security highlight the risk of confidential details leaking through chatbots or summarisation tools. Open models may also continue to store or process inputs in logs, adding exposure if access controls are lax.

- Prompt injection and hallucinations: Attackers can craft inputs that override system instructions or cause the model to generate malicious or nonsensical responses.

- Cases of prompt injection have resulted in models divulging system secrets or executing unintended commands.

- Hallucinations, plausible but false outputs, represent another reputational risk, particularly when the model is used for decision support or customer interactions.

- Over‑permission and shadow usage: When rolling out open LLMs, many organisations grant broad access to maximise functionality. Over time, these permissions can accumulate without review, creating a wide attack surface. Additionally, employees may experiment with unsanctioned models (“shadow AI”), which exposes data to unvetted platforms.

- Supply chain and compliance: Because open models can be downloaded and modified by anyone, there is a risk that malicious code or backdoors are embedded in weights or dependencies.

- Ensuring that training data, code, and infrastructure comply with industry regulations (such as GDPR, HIPAA, or regional data residency laws) becomes the responsibility of the enterprise charter global.

- Ensuring that training data, code, and infrastructure comply with industry regulations (such as GDPR, HIPAA, or regional data residency laws) becomes the responsibility of the enterprise charter global.

These risks are not unique to open models, but they are magnified when organisations manage the entire stack themselves. Proprietary providers invest heavily in security certifications and compliance frameworks, which may be attractive for highly regulated industries.

Regardless of the approach, effective governance is essential. Policies should cover prompt design, access control, audit logging and continuous monitoring. Many firms implement an internal AI risk committee to oversee model selection, testing and deployment, ensuring that no single function makes decisions in isolation.

Do open models deliver comparable capabilities and performance?

Performance and flexibility are often touted as reasons to choose open source. Yet the reality is nuanced; here’s how:

- Customisation and control: With open models, you have full access to architecture and weights, enabling fine‑tuning, adaptation to domain terminology and integration with proprietary datasets.

- This control can produce models that outperform generic models on specialised tasks, an appealing prospect for businesses with unique vocabulary or workflows.

- Closed models typically restrict customisation to prompt engineering and minor parameter adjustments. If your use case requires deeply embedding industry‑specific knowledge, open source provides that freedom.

- This is also where LLM SEO optimization techniques matter most, because the enterprise needs consistent terminology, entity alignment, and compliant answer generation across channels, not just a smart model in isolation.

- Performance variability: Not all open models are created equal. Benchmark tests show that some open models approach or even exceed the accuracy of commercial models, while others lag.

- Enterprises that pick smaller models for lower cost may sacrifice response quality or reasoning capability.

- Closed models, on the other hand, invest in large training datasets and benefit from continual optimisation.

- They often provide more consistent performance across a broader range of tasks, including multilingual support and complex reasoning.

- Support and ecosystem: Open source thrives on community contributions. Knowledge, bug fixes and enhancements emerge quickly from a global network of users.

- However, there is no guarantee of timely help when something goes wrong. Proprietary providers offer formal SLAs, dedicated customer support and enterprise‑grade documentation.

- This reliability can be crucial when your model powers customer‑facing interactions where downtime is unacceptable.

- Ease of deployment and scalability: Closed models typically offer plug‑and‑play APIs that integrate into existing workflows with minimal setup. They also scale seamlessly across multiple regions and provide built‑in compliance features.

- Open models require careful orchestration of containers, load balancers and GPU clusters. Without this infrastructure, latency can spike, and user experience suffers.

- Companies with strong engineering teams may embrace the challenge; others may prefer the convenience of vendor‑managed solutions.

Not all open models will meet your quality needs out of the box. If you have the data and expertise to customise a model, open source can yield impressive results. For generalised tasks like marketing copy or multilingual customer support, a proprietary model might perform better.

Many organisations ultimately choose a hybrid approach using open models for internal analytics or regulated data, while adopting closed models for high‑stakes public interfaces.

Which use cases play to the strengths of open and closed LLMs?

Selecting the right model begins with clarifying the outcomes you need. The following scenarios show where open and closed models excel:

Open models are ideal when:

- Data sensitivity is paramount. Sectors such as healthcare, finance and legal often require on‑premises deployment due to privacy requirements. Fine‑tuning an open model behind your firewall ensures full control over sensitive data.

- Domain‑specific knowledge matters. Manufacturers or logistics firms may train open models on proprietary vocabulary and processes to build specialised co‑pilots that deliver deeper insights than general models.

- Regulatory compliance demands transparency. Open architectures allow auditors to inspect how data flows through the system and how outputs are generated. This auditability can simplify compliance reviews.

Closed models are best when:

- Rapid deployment and time‑to‑value are critical. Startups and mid‑sized companies often integrate API‑based models to roll out features without building an AI infrastructure from scratch.

- Multilingual and broad domain coverage is needed. Closed models often excel in general reasoning and support dozens of languages, which is beneficial for global customer service.

- Service level guarantees matter. Enterprises operating under strict uptime and response-time requirements rely on vendor‑provided SLAs.

Hybrid approaches combine both strategies. Companies may route specific tasks, such as summarising proprietary documents, to an open model and then use a closed model to refine the output for customer‑facing use.

Tools enabling multiple models are emerging, providing a “best of both worlds” configuration. In our view, hybrid strategies will dominate because they allow enterprises to tune the cost-quality-risk equation for each use case rather than settling for a one‑size‑fits‑all model.

How do we weigh cost, risk and capability side by side?

This table summarises the differences between open source and proprietary LLMs across key factors. It synthesises research insights and our experience advising enterprises on generative AI strategy.

What frameworks guide responsible adoption?

Even with a clear comparison, the decision is rarely binary. Successful AI initiatives share common practices that point out the open versus closed debate:

- Establish a governance model. Define policies for model selection, prompt design, data intake, and output handling. Adopt frameworks such as the AI security top‑ten list to anticipate prompt injection, data poisoning, and over-reliance. Ensure logging and auditing are built in from the start. Companies that embed risk management into early pilots move faster during scaling phases.

- Invest in talent and education. Technical depth is a prerequisite for the success of open models. Upskill existing teams or hire specialists in machine learning operations, cybersecurity and data engineering. Provide training on safe prompting to reduce errors and avoid injection attacks. Developer familiarity with open tools is highly valued.

- Start with high‑value, low‑risk use cases. Early wins build confidence. Internal analytics, content summarisation and code assistance are good candidates. Avoid customer‑facing deployments until you have established robust monitoring and fallback mechanisms in place.

- Plan for multi‑model orchestration. The landscape is evolving rapidly; new models emerge monthly. Build flexible interfaces that allow you to switch models or combine outputs as needs change.

- Measure impact and iterate. Track key performance indicators such as response time, accuracy, scalability and return on investment. Compare costs and outcomes across different model types. Use experiments to optimise for each use case rather than adopting a single model across all functions.

Choosing the right LLM strategy for scale, safety, and ROI

Open source LLMs are not a cure, nor are they a fringe experiment. They offer powerful advantages, lower per‑token costs, the freedom to customise, and the ability to keep data under your control.

However, they also demand sophisticated infrastructure, robust security practices and a skilled team to manage them. Proprietary models simplify deployment and deliver consistent performance, but they come with higher per‑use costs and less flexibility.

For CMOs and technology leaders, the answer lies in aligning the model choice with business priorities. If your organisation values full data sovereignty and has the technical maturity to build and operate AI systems, open source is a compelling option. If speed, reliability and out‑of‑the‑box quality are paramount, proprietary models may justify their cost.

Many enterprises will blend both, leveraging open models for internal intelligence and proprietary models for external‑facing tasks. The key is to make deliberate choices informed by a clear understanding of cost, risk and capability trade‑offs.

How Large Language Models Rank and Reference Brands?