Are keywords really dead, or do LLMs still rely upon them?

TL;DR

- Keywords are for advertisers to plan budgets; queries are how users ask questions; prompts are instructions for AI reasoning systems.

- Old SEO was about helping a system find a page; new SEO is about helping a system construct a clear, reasoned answer.

- LLMs prefer specific, situation-based information over broad, neutral content, favouring pros, cons, and constraints.

- Success is measured by whether your brand appears in the AI’s decision tree when it generates a solution for a user.

- To remain visible, brands must shift toward content designed for reasoning, often requiring strategies such as AI content chunking for enterprise pages.

Who is this blog for?

- CMOs and Marketing Leaders: Those tasked with maintaining AI search visibility for SaaS brands while navigating declining click-through rates from traditional SERPs.

- SEO Professionals: Experts moving beyond basic rankings to understand what LLM SEO means in a generative ecosystem.

- Enterprise Content Managers: Teams managing vast amounts of data who need to implement AI content chunking for enterprise pages to make their assets machine-reasoning friendly.

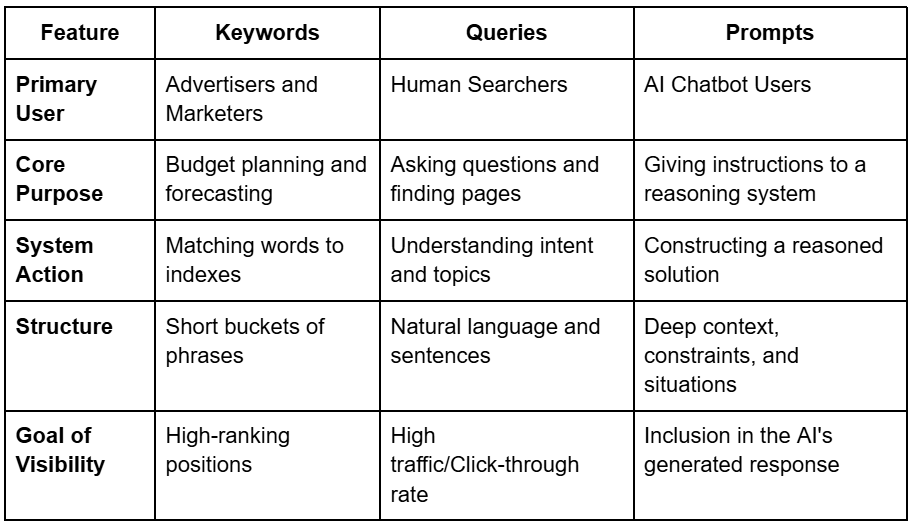

A comparative analysis of keywords, queries, and prompts

The fundamental misunderstanding in modern marketing is treating keywords, queries, and prompts as interchangeable.

They represent three distinct eras of search technology and user intent.

Are keywords really dead?

It’s the dramatic headline that seems to pop up every time search technology takes a leap forward, but the short answer is: no, keywords are not dead.

We shouldn't rush to bury them just yet, as they still play a vital role in the planning phase of marketing.

The key to understanding their survival lies in why Google still maintains the Keyword Planner. The term "keyword" is used specifically when planning and budgeting are involved; advertisers still need those keyword buckets to forecast their spending and build out the structure of their campaigns.

In this context, keywords are a tool for marketers to organise their world.

However, while keywords aren't dead, they are no longer the end goal of SEO.

The shift is happening because:

- Users don't think in keywords: They speak in sentences, describe their problems, and ask questions through queries.

- AI doesn't just match words: Reasoning systems like ChatGPT use prompts, which are essentially instructions that tell the system how to think about an answer, rather than just finding a page that contains a specific phrase.

If keywords are outdated, why does Google still call it the keyword planner?

It is easy to assume that the term keyword is a relic, but Google's continued use of it is intentional.

Google uses the word ‘keyword’ specifically when money and planning are involved.

Advertisers require structured keyword buckets to create ad campaigns, forecast spend, and structure their ad copy. It is a tool for the buyer, not necessarily a reflection of the seeker.

In contrast, Google uses the term "query" in Search Console because that report reflects what users actually type when they search.

While SEO has already evolved to focus on the intent behind these queries, moving toward topical authority and internal linking, the rise of the prompt marks a shift many still fail to grasp.

Understanding how LLMs collect information starts with recognizing that they don't just see a query; they see a problem to solve.

The acronym GPT stands for Generative Pre-trained Transformer. The name matters because it describes a system that generates text rather than one that merely retrieves pages, which is why this article treats it as a reasoning system rather than a search engine. When you interact with a GPT-based model, you are chatting with a system that requires instructions.

Traditional keywords were designed to help a system find a page. Queries were designed to express what a user was asking. A prompt tells the system how to think about the answer.

At FTA Global, we see the shift from queries to prompts clearly. Winning in LLM search means identifying the buyer's problem statement, understanding their queries, and then narrowing these down naturally into prompts that LLMs can understand.

If you are evaluating LLM optimization services in Bangalore, look for a partner that knows the entire LLM visibility stack: not just SEO, but also how models interpret, select, and cite information.

How do LLMs retrieve information?

To understand the technical side of this shift, we must look at how an AI constructs an answer.

Once a user provides a prompt, they aren't looking for a list of pages; they are looking for a comparison, usually between two entities.

Consider the evolution of a search:

- The Keyword: best CRM for startups

- The Query: Which CRM is best for a startup?

- The Prompt: What CRM should a 50-person team use if onboarding is slow, sales follow-ups are getting missed, and the team wants something simple that scales?

In the prompt scenario, the AI acts as a personal assistant. It isn't trying to match the words "CRM" and "startup" to a database.

Instead, it is trying to construct the best possible solution based on the specific constraints of team size, slow onboarding, and scalability.

If your content is merely a keyword-optimised list of features, the AI cannot use it to reason through the user's specific problem.

This is why AI search visibility for enterprise brands is becoming increasingly dependent on situational content.
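To make "situational content" concrete, here is a toy sketch in Python. It is not how an LLM reasons internally; it only illustrates why a generic feature list gives a system little to work with when a prompt carries explicit constraints. The `ContentBlock` class, the `coverage` function, and the constraint tags are all hypothetical names invented for this illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ContentBlock:
    """A piece of content plus the situations it explicitly addresses (hypothetical model)."""
    title: str
    addresses: set[str] = field(default_factory=set)


def coverage(constraints: set[str], block: ContentBlock) -> float:
    """Fraction of the prompt's constraints that the block explicitly addresses."""
    if not constraints:
        return 0.0
    return len(constraints & block.addresses) / len(constraints)


# Constraints taken from the CRM prompt above, written as illustrative tags.
prompt_constraints = {
    "team-size-50", "slow-onboarding", "missed-follow-ups", "simple", "scales",
}

feature_list = ContentBlock("CRM features overview", {"scales"})
situational = ContentBlock(
    "CRM for 50-person teams with slow onboarding",
    {"team-size-50", "slow-onboarding", "missed-follow-ups", "simple"},
)

for block in (feature_list, situational):
    print(f"{block.title}: {coverage(prompt_constraints, block):.0%}")
```

In this simplified picture, the situational page speaks to four of the five constraints, while the feature list speaks to one. Real systems weigh far more than tag overlap, so treat this as a way to audit your own content rather than a model of the AI.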

Why do high keyword rankings no longer guarantee LLM visibility?

Many brands are currently experiencing a confusing phenomenon: their SEO metrics look healthy, their pages rank well for many keywords, but their click-through rate is declining.

Traditional content outlines are often defined by keywords and include broad definitions, generic features, and standard benefits.

While this content can rank well on Google, AI systems often ignore it.

When an AI asks, "Does this page help me explain the answer clearly?", a broad, neutral page offers no value.

Reasoning systems prefer clarity and specificity in content. They look for content that defines:

- The specific type of user the product is for.

- The exact situations where a tool should be applied.

- The pros, cons, and reasons to choose or avoid a product, presented clearly in the style of a battle card.

If your content does not provide these reasoning blocks, the AI has no reason to include your brand in its answer.

Why is AI content chunking essential for enterprise data?

Chunking is the process of breaking down long-form content into smaller, semantically meaningful segments. This allows LLMs to gather only the most relevant chunk of information to answer a specific prompt, rather than trying to parse a 5,000-word page of generic text.
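As a minimal sketch of the idea, the Python function below groups consecutive paragraphs into chunks under a word budget. It assumes paragraphs are separated by blank lines and uses paragraph boundaries as a rough stand-in for "semantically meaningful"; production pipelines often split on headings or other signals instead. The function name `chunk_paragraphs` and the `max_words` default are illustrative choices, not a standard.

```python
def chunk_paragraphs(text: str, max_words: int = 120) -> list[str]:
    """Group consecutive paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words becomes its own chunk
    instead of being cut mid-sentence.
    """
    chunks: list[str] = []
    current: list[str] = []  # paragraphs collected for the chunk in progress
    count = 0                # words collected so far in the current chunk

    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        words = len(para.split())
        # Close the current chunk if adding this paragraph would exceed the budget.
        if current and count + words > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += words

    if current:
        chunks.append("\n\n".join(current))
    return chunks


page = "Who it is for.\n\nWhen to use it.\n\nTrade-offs and alternatives to consider."
for i, chunk in enumerate(chunk_paragraphs(page, max_words=8), start=1):
    print(f"--- chunk {i} ---\n{chunk}")
```

Keeping each paragraph whole means every chunk can stand on its own as an answer fragment, which is the property the rest of this section depends on.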

For large organizations, this is the frontier of Search Engineering.

Without proper AI content chunking for enterprise pages, your most valuable insights may be buried under a mountain of neutral text that the AI deems irrelevant to a specific user's prompt.

Why do only specific brands keep showing up in AI answers?

Visibility in the age of AI feels selective because search is now about deciding answers, not just finding pages.

You may see a brand with lower domain authority appearing in a ChatGPT or Perplexity answer, while a giant in the industry is left out. This happens because the smaller brand provided the specific reasoning path the AI needed.

The primary question for modern marketers has changed from "What keyword should I rank for?" to "In what LLM model should my brand appear?".

When you think in terms of decision trees, you realise your brand needs to be the solution to a specific set of problems and constraints.

Authority alone is no longer enough; the AI must trust that your brand provides the clearest, most specific solution to the user's instruction.

The new LLM SEO era of queries and prompts

At the end of the day, the shift we’re seeing in search isn't because SEO is "broken." It’s happening because search engines have evolved from simple librarians to personal assistants.

If your content is still just a list of broad definitions and generic features, you might keep your high ranking on a traditional results page, but you’ll likely be invisible in the AI-generated answers that users are actually relying on for guidance.

Apply LLM SEO optimization techniques by turning your highest value pages into decision frameworks. Make the use case explicit, name the constraints, state the trade-offs, and show alternatives. When AI can clearly explain why your brand fits, it is far more likely to include you.
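One way to keep a content team honest about these four elements is to treat each page as a small structured record and check it for gaps before publishing. The sketch below is an assumption about how such a checklist could look in Python; `DecisionBlock` and `missing_elements` are hypothetical names, not part of any SEO tool.

```python
from dataclasses import dataclass, field, fields


@dataclass
class DecisionBlock:
    """The four elements of a decision framework for one page (hypothetical model)."""
    use_case: str = ""
    constraints: list[str] = field(default_factory=list)
    trade_offs: list[str] = field(default_factory=list)
    alternatives: list[str] = field(default_factory=list)


def missing_elements(block: DecisionBlock) -> list[str]:
    """Return the names of the elements that are still empty, in declaration order."""
    return [f.name for f in fields(block) if not getattr(block, f.name)]


draft = DecisionBlock(
    use_case="CRM for a 50-person sales team",
    constraints=["slow onboarding", "missed follow-ups"],
)
print(missing_elements(draft))  # ['trade_offs', 'alternatives']
```

A draft that still reports missing elements is likely to read as the broad, neutral content described earlier, so the list doubles as an editing to-do.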

How Large Language Models Rank and Reference Brands?