Why AI-Driven SEO Needs Data Hygiene More Than Keywords

As of mid-2025, 13% of all US desktop searches already trigger an AI-generated overview in Google results, up from just 6.5% in January, according to Semrush. That’s a rapid doubling in under three months, showing how fast AI-driven search is reshaping visibility.

In this new environment, your ranking is no longer decided by keyword density but by how clean and structured your data is. The algorithms now read your site the way a machine would: scanning for crawl errors, schema accuracy, and consistent entity signals.

When those signals are noisy or broken, AI systems can’t confidently cite your content. A strong AI SEO strategy depends first on data hygiene, and on SEO automation that keeps data quality high enough to rank. Keywords still matter, but they come after structure and trustworthiness. The new rule of visibility is simple: if your data is clean, AI will recognise you.

AI SEO strategy starts with data hygiene, not keywords

Generative answers and AI overviews are pulling facts, entities, and relationships from your site and the wider web. If crawlers hit broken architecture, thin metadata, or duplicate pages, your brand becomes invisible to AI surfaces, regardless of keyword density. Semrush has formalized this shift by adding an AI Search category inside Site Audit to prepare sites “to be found and cited by AI search engines.” That matters more than squeezing a few extra head terms into a paragraph.

Enough reports now confirm that clean, well-cited brand pages appear across ChatGPT, Google AI modes, and Perplexity. If your information architecture is inconsistent, these systems cannot cite you with confidence. Tracking those AI citations pushes teams to fix data quality before chasing keywords.

AI ranks and cites what it can parse and trust. Data quality is your first lever. Keywords are second-order.

Why data quality for ranking is the new moat

You need to start thinking like an LLM. It requires clarity, consistency, and context.

- Crawlability and coverage

If bots cannot reach the right URLs, nothing else counts. Ahrefs’ Site Audit crawls entire sites, flags 170+ issue types, and rolls them up into a health score. This is data quality in action.

- Canonical truth

Duplicate clusters and ambiguous canonicals dilute your authority. Hygiene fixes consolidate signals to the correct URL. Practical guides from Ahrefs and others position canonical correctness as one of the highest-leverage hygiene fixes.

- Structured data and entities

Schema clarifies what a page is about, who authored it, and how it relates to your products and people. You need structured-data checks that improve machine understanding and reduce hallucinations in AI answers.

- Index hygiene and deindexation risk

“SEO hygiene” is essential to prevent deindexation. Index bloat, thin sections, and poor quality control invite volatility. Hygiene cuts the noise and stabilizes discovery.

- Performance and UX signals

Speed, mobile usability, and stable rendering are table stakes. Audits surface regressions before they become ranking problems.

Consistent, crawlable, and marked-up data earns trust signals that AI systems can reuse. That’s what ranks, and that’s what gets cited.

Data hygiene SEO: the high-impact FTA checklist

This checklist gives marketers a structured rhythm to protect their brand’s visibility.

1. Crawl and index control

- Validate robots directives, XML sitemaps, and canonical rules against live behavior.

- Remove or noindex thin, duplicate, parameterized, or staging URLs.

- Ensure paginated series resolve with clear canonicals and discoverable “view all” where appropriate.

- Re-crawl and verify deltas until the index aligns with your intended site map.
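The "re-crawl and verify deltas" step can be sketched in a few lines. This is a minimal illustration, assuming you can export the set of indexable URLs from whichever crawler you use; the function names and the example URLs are hypothetical, not part of any tool's API.

```python
from xml.etree import ElementTree

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> set[str]:
    """Extract every <loc> value from a standard XML sitemap."""
    root = ElementTree.fromstring(xml_text)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def index_deltas(sitemap: set[str], indexable: set[str]) -> dict[str, set[str]]:
    """Compare intended URLs (sitemap) with what a crawl found indexable."""
    return {
        # Declared in the sitemap but blocked, noindexed, or broken.
        "in_sitemap_not_indexable": sitemap - indexable,
        # Indexable but never declared: candidates for index bloat.
        "indexable_not_in_sitemap": indexable - sitemap,
    }


if __name__ == "__main__":
    xml = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://example.com/a</loc></url>"
        "<url><loc>https://example.com/b</loc></url>"
        "</urlset>"
    )
    crawl_export = {"https://example.com/a", "https://example.com/c"}
    for label, urls in index_deltas(sitemap_urls(xml), crawl_export).items():
        print(label, sorted(urls))
```

When both sets come back empty, the index matches the intended site map and the loop can stop.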

2. Information architecture

- Contain topic clusters in a single canonical hub, with internal links pointing inward.

- Standardize URL patterns, breadcrumb schema, and navigation labels.

3. Structured data and content metadata

- Implement and validate the schema for Organization, Product, Service, Article, FAQ, and Breadcrumbs, as applicable.

- Normalize titles, H1s, meta descriptions, and OG tags with templated rules.
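One way to make schema validation repeatable is a small required-property check per type. The sketch below is an assumption-heavy illustration: the `REQUIRED` table is a hypothetical house policy, not Google's or schema.org's official requirements, and it only inspects top-level nodes.

```python
import json

# Hypothetical house policy: properties each schema.org type must carry.
REQUIRED = {
    "Organization": {"name", "url", "logo"},
    "Article": {"headline", "author", "datePublished"},
    "Product": {"name", "description", "offers"},
}


def missing_properties(jsonld_text: str) -> dict[str, set[str]]:
    """Return {type: missing property names} for one JSON-LD document."""
    data = json.loads(jsonld_text)
    # Accept a single node, a list of nodes, or an @graph wrapper.
    nodes = data if isinstance(data, list) else data.get("@graph", [data])
    problems: dict[str, set[str]] = {}
    for node in nodes:
        node_type = node.get("@type")
        types = node_type if isinstance(node_type, list) else [node_type]
        for t in types:
            missing = REQUIRED.get(t, set()) - set(node)
            if missing:
                problems[t] = missing
    return problems


if __name__ == "__main__":
    snippet = json.dumps({
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": "Data hygiene for AI search",
        "datePublished": "2025-06-01",
    })
    print(missing_properties(snippet))
```

A check like this complements, rather than replaces, a validator such as Google's Rich Results Test.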

4. Content integrity for AI content optimization

- Consolidate near-duplicates.

- Keep authorship and dates explicit.

- Add source citations, product specs, and FAQs.

- Refresh decaying URLs with updated facts and schema, not just new keywords.

AI overviews and generative engines rely on freshness and clarity.

5. Performance and reliability

- Measure real-user speed, image weight, CLS, and JS bloat.

- Gate new releases on performance budgets.

6. Monitoring and alerting

- Configure always-on crawls and alerts for spikes in 4xx/5xx, canonical mismatches, or schema errors.

- Set alerts for index coverage deltas and robots.txt changes.

- If the indexable URL count or sitemap-to-index parity shifts by more than a set percent in 24 hours, trigger an incident.

- Include unexpected robots.txt edits and sudden spikes in meta noindex.
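The incident rule above can be expressed as a comparison between two daily snapshots. This is a rough sketch: the `Snapshot` fields, the reason codes, and the 10% default threshold are all assumptions chosen for illustration, and the right threshold will depend on how volatile your site normally is.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """One daily crawl summary (all field names are hypothetical)."""
    indexable_urls: int
    sitemap_urls: int
    noindex_pages: int
    robots_txt_hash: str  # e.g. a SHA-256 digest of the live robots.txt


def pct_shift(before: float, after: float) -> float:
    """Absolute change from before to after, as a percentage of before."""
    if before == 0:
        return 0.0 if after == 0 else float("inf")
    return abs(after - before) / before * 100


def parity(s: Snapshot) -> float:
    """Ratio of indexable URLs to sitemap URLs."""
    return s.indexable_urls / s.sitemap_urls if s.sitemap_urls else 0.0


def incidents(prev: Snapshot, curr: Snapshot, max_shift_pct: float = 10.0) -> list[str]:
    """Return the reasons, if any, to open an incident for this 24-hour window."""
    reasons = []
    if pct_shift(prev.indexable_urls, curr.indexable_urls) > max_shift_pct:
        reasons.append("indexable_url_count")
    if pct_shift(parity(prev), parity(curr)) > max_shift_pct:
        reasons.append("sitemap_index_parity")
    # Only an increase in noindex pages counts as a spike.
    if (curr.noindex_pages > prev.noindex_pages
            and pct_shift(prev.noindex_pages, curr.noindex_pages) > max_shift_pct):
        reasons.append("noindex_spike")
    if prev.robots_txt_hash != curr.robots_txt_hash:
        reasons.append("robots_txt_changed")
    return reasons


if __name__ == "__main__":
    yesterday = Snapshot(1000, 1000, 20, "a1")
    today = Snapshot(850, 1000, 30, "b2")
    print(incidents(yesterday, today))
```

An empty list means the day passed quietly; anything else would be routed to whatever alerting channel the team already uses.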

By auditing and cleaning data quarterly, teams maintain consistent crawlability, schema accuracy, and content freshness. That improves trust signals, ranking stability, and AI-driven search performance.

Protect your rankings with automation

Automation is not about more output. It is about fewer defects and faster repair loops that keep visibility stable.

- Automated audits that matter

Run scheduled crawls to surface crawl traps, redirect loops, orphan pages, broken canonicals, and schema errors before traffic drops. Treat the output as engineering work, not a marketing backlog.

- Impact-first triage

Collapse hundreds of warnings into a short fix list ranked by revenue risk, template breadth, and effort. Ship the top five issues each sprint and re-crawl to verify the fix.

- Trend reporting for leaders

Track total open issues, time to fix, and proportion of clean templates. If those lines improve, rankings and AI citations usually follow.

- Monitor AI visibility

Add a simple scorecard for brand citations across AI search surfaces. Tie gains to specific hygiene fixes to justify the investment.
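Impact-first triage is easier to agree on when the ranking rule is written down. The sketch below assumes a simple score of revenue risk times template breadth divided by effort; that formula, the 1-to-5 scales, and the issue names are illustrative assumptions, not an established standard.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    revenue_risk: int      # 1 (low) to 5 (high), judged by the team
    template_breadth: int  # number of page templates affected
    effort: int            # 1 (trivial) to 5 (large)


def priority(issue: Issue) -> float:
    """Higher is more urgent: big, broad problems that are cheap to fix."""
    return issue.revenue_risk * issue.template_breadth / issue.effort


def top_fixes(issues: list[Issue], limit: int = 5) -> list[Issue]:
    """Collapse a long warning list into the sprint's short fix list."""
    return sorted(issues, key=priority, reverse=True)[:limit]


if __name__ == "__main__":
    backlog = [
        Issue("Broken canonical on product template", 5, 4, 2),
        Issue("Missing alt text on blog images", 2, 1, 1),
        Issue("Redirect chain in category nav", 3, 6, 3),
    ]
    for issue in top_fixes(backlog, limit=2):
        print(f"{priority(issue):5.1f}  {issue.name}")
```

Whatever formula a team settles on, keeping it in code makes the ranking reproducible from one sprint to the next.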

AI content optimization without screwing your dataset

Uncontrolled generation increases duplication, contradicts canonical facts, and worsens crawl waste.

AI undoubtedly helps with research, outlines, and QA. However, it should not bypass your fact layer or schema rules.

- Set guidelines first

Define a duplication policy, a schema matrix by template, and a fact checklist. Block publish if any of these fail.

- Use AI to speed quality, not volume

Let models draft briefs, propose internal links, and flag stale facts. Route every draft through human fact checks and automated validators.

- Continuously de-bloat

Merge near-duplicates, serve a 410 for low-value variants, and redirect retired paths. Reclaim crawl budget for canonical pages.

- Prove it with data

Report on duplicate clusters removed, structured data pass rate, and indexable URL count. Tie those metrics to non-branded clicks and AI panel mentions.
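A duplication policy needs a measurable definition of "near-duplicate". One common approach, shown here as a minimal sketch, is Jaccard similarity over word shingles; the shingle size of 3 and the 0.8 threshold are assumptions to tune, and the pairwise comparison is only practical for small page sets.

```python
import re
from itertools import combinations


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Break text into overlapping runs of `size` lowercase words."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Shared shingles divided by all shingles; 1.0 means identical sets."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(pages: dict[str, str], threshold: float = 0.8) -> list[tuple[str, str]]:
    """Return URL pairs whose body text overlaps at or above the threshold."""
    sets = {url: shingles(body) for url, body in pages.items()}
    return [
        (u, v)
        for u, v in combinations(sorted(sets), 2)
        if jaccard(sets[u], sets[v]) >= threshold
    ]


if __name__ == "__main__":
    pages = {
        "/widget": "Blue widget with steel frame and lifetime warranty",
        "/widget-2": "Blue widget with steel frame and lifetime warranty.",
        "/hose-guide": "Guide to choosing a garden hose",
    }
    print(near_duplicates(pages))
```

Pairs flagged here are merge or redirect candidates, and the count of flagged pairs over time gives a simple figure for the "duplicate clusters removed" report.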

Tools to operationalize

- Writer or Grammarly Business for governed generation with style and terminology controls.

- Originality.ai or Copyleaks for duplication checks at scale.

- Google Rich Results Test plus JSON-LD linters in CI for schema testing.

- Analytics and log analysis to verify crawl budget shifts after cleanups.

AI SEO tools and data-hygiene coverage

The table below compares leading SEO automation tools on the features that truly influence data hygiene and long-term site maintainability.

It highlights which platforms can audit deeply, validate structured data, monitor continuously, and support AI-ready visibility.

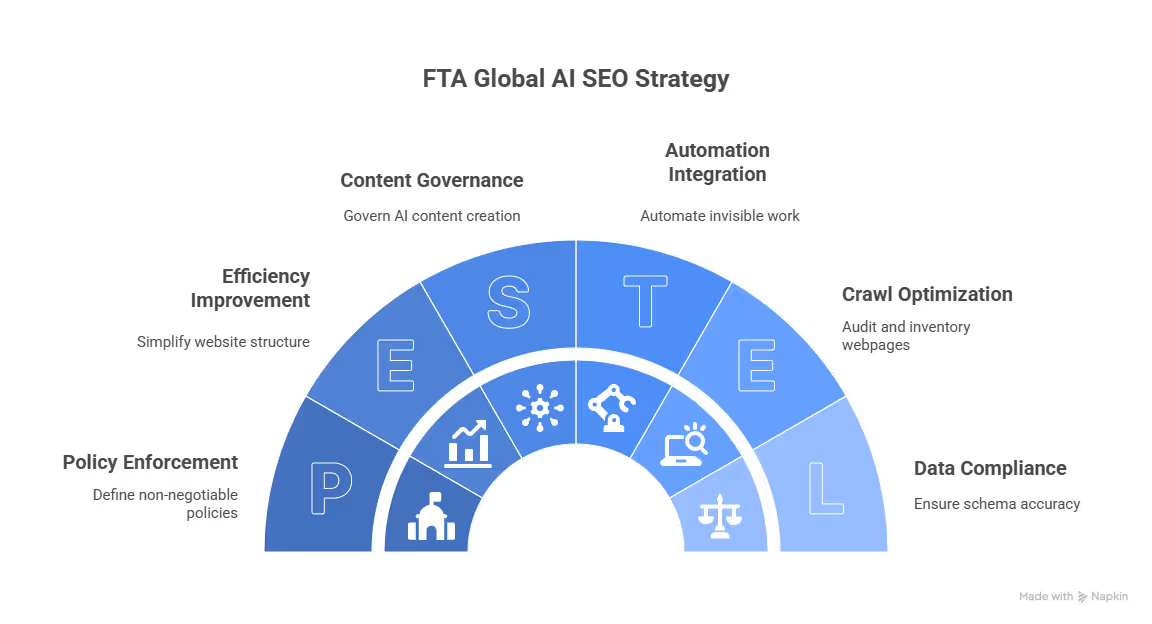

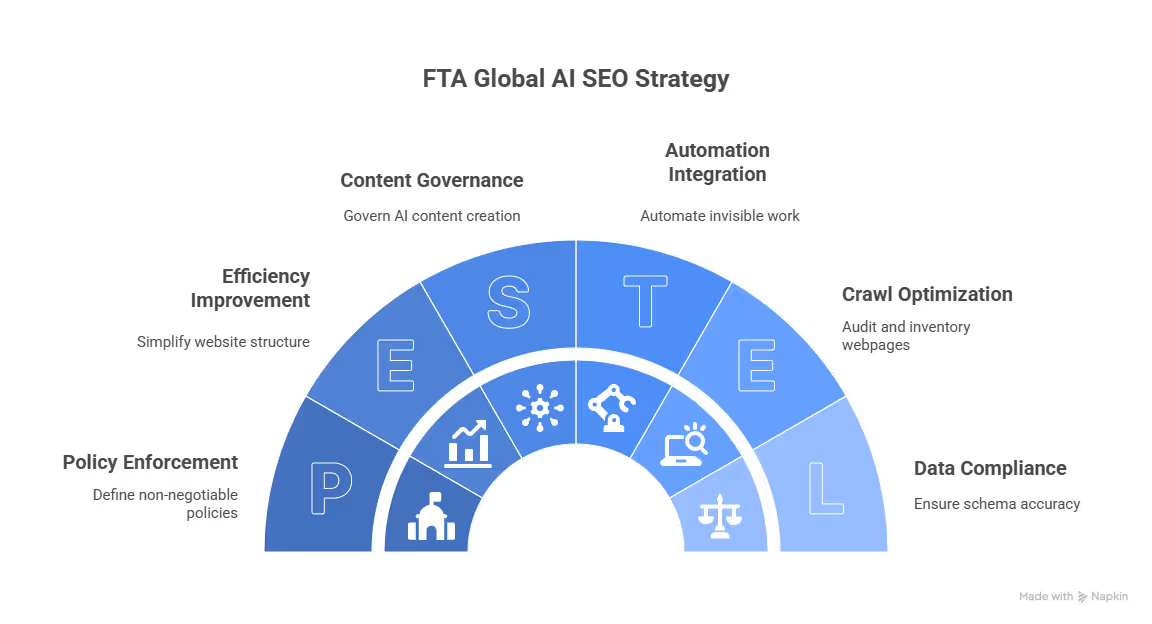

A proprietary FTA AI SEO strategy

1. Audit and inventory your webpages

Run a full crawl to see what search engines actually read. Map every indexable path, orphan page, redirect chain, and canonical conflict.

Follow it with an entity audit to confirm your brand, products, people, and locations are represented consistently and marked up in structured data.

2. Set non-negotiable policies

Create three quick policies:

- Schema policy: define required schema types and validation steps.

- Duplication policy: when to merge, redirect, or delete.

- Refresh policy: what “fresh” means per template and when to fact-check before republishing.

3. Automate the invisible work

Schedule crawls and real-time alerts for crawl traps, index drops, and schema issues.

Integrate alerts into your development tracker so fixes happen in sprint cycles, not later.

Use monitoring tools like ContentKing, Lumar, or JetOctopus to trend hygiene and visibility metrics.

4. Simplify the structure

Consolidate redundant hubs, flatten deep paths, and standardize metadata.

Clear architecture improves crawl efficiency and entity understanding, which is critical for AI visibility.

5. Govern AI content creation

Uncontrolled generation causes duplication, factual drift, and crawl waste.

Use AI for research and drafting, but enforce checks for facts, schema accuracy, and originality before publishing. Refresh proven pages with verified updates, not mass rewrites.

AI SEO strategy roadmap: 0-90 days

Days 0-15: stabilize

- Run full Semrush or Ahrefs audits. Fix critical crawl blocks, broken canonicals, redirect chains, and 4xx/5xx clusters.

- Remove index bloat. Submit clean sitemaps.

- Ship org-level schema (Organization, Logo, Social profiles) to establish entity authority.
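For the org-level schema, a single JSON-LD block usually covers the organization, its logo, and its social profiles. The sketch below generates one with schema.org's `Organization` type and its `logo` and `sameAs` properties; the brand name and URLs are placeholders, and the helper function is hypothetical.

```python
import json


def organization_jsonld(name: str, url: str, logo_url: str, profiles: list[str]) -> str:
    """Build an Organization JSON-LD string for a <script type="application/ld+json"> tag."""
    return json.dumps(
        {
            "@context": "https://schema.org",
            "@type": "Organization",
            "name": name,
            "url": url,
            "logo": logo_url,
            # sameAs links the entity to its official profiles elsewhere.
            "sameAs": list(profiles),
        },
        indent=2,
    )


if __name__ == "__main__":
    print(organization_jsonld(
        name="Example Co",
        url="https://example.com",
        logo_url="https://example.com/logo.png",
        profiles=[
            "https://www.linkedin.com/company/example-co",
            "https://x.com/exampleco",
        ],
    ))
```

Generating the block from one source of truth keeps the brand name and profile links identical on every template.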

Days 16-45: standardize

- Create metadata templates by page type.

- Implement a schema matrix by template with validation gates in CI/CD.

- Turn on recurring audits and hook alerts to issue tracking.

Days 46-90: scale what works

- Roll out a canonical hub-and-spoke model for 3-5 core topics.

- Launch AI search visibility tracking and benchmark against two primary competitors.

- Use content-generating tools and LLMs to accelerate content within those hubs, but enforce the hygiene checklist before publishing.

What “good data hygiene” looks like in 2025

- Your index is smaller and cleaner.

- Audit issue counts trend down quarter over quarter.

- AI panels and overviews cite your brand for your priority queries.

- Organic traffic consolidates on canonical hubs rather than spreading across duplicates.

- Content velocity increases without raising duplication or fact errors.

That is the compound return of data quality for ranking.

AI-driven SEO is a data discipline

Get your data house in order, and the rankings, AI citations, and revenue follow. Chase keywords without hygiene and you’ll be invisible to the systems that now shape demand.

