Are AI Assistants Stealing Your Discovery and Pipeline, and How Do You Win With LLM Optimisation?

Discovery is moving from 10 blue links to machine-generated answers in assistants and AI summaries. Your pages only matter when they are recognised as a trusted source and used in the answer.

LLM Optimisation is the operating system that enables this. It aligns content, structure, proofs, and distribution so models can find, trust, and use your brand with confidence. Your brand must show up the moment buyers ask a question. It should be cited with precise, verifiable claims. The outcome is faster conversions led by sharper, leaner content.

This blog article gives a complete LLM Optimisation operating plan that you can plug into your existing marketing model. You will learn what changed in user behaviour, how models assemble answers, what to measure when rank disappears, which formats win, where to place data so models can use it, how to run a ninety-day sprint, how to govern claims, who owns what, and the minimal tool stack required.

It closes with the FTA’s Answer Blueprint, our proprietary asset that replaces the classic keyword brief and brings measurement and governance under one roof.

Are search engines still the starting point for discovery

Search behaviour is fragmenting across three surfaces. The first is the classic query-and-click. The second is AI summaries in results that settle intent with a paragraph and a short list of sources. The third is assistant-style chats that compare, explain, and decide without ever showing a results page. For many tasks, the first satisfactory answer wins. That answer often lives inside an assistant.

Three practical signals CMOs should note:

- The first touch is now a synthesised paragraph, not a headline. Your job is to be cited or named inside that paragraph.

- Buyers ask full questions and tasks. They expect clarity, steps, and proofs. Thin category pages or generic blogs are skipped.

- When assistants cannot find a clear, current source of truth, they either guess or pull third-party summaries. That is where misquotes start.

What has changed in the last 12 months

- More tasks begin with a question or a job to be done.

- People accept answers inside the results or chat interface.

- Tolerance for click-through is lower unless the task continues on your site.

- Recency and provenance signals have become visible to users. They reward brands that update often and cite clearly.

Where assistants pull answers from and why that matters

Assistants mix three inputs. Pretrained knowledge. Retrieved passages from sources with clear structure and authority. Recency signals. Your influence grows when you provide clean, consistent, and verifiable passages for retrieval, mapped to the exact questions buyers ask.

Why strong brand signals reduce the cost of discovery

When a model can confidently resolve your entity, your facts are easier to surface. That means fewer assets create more presence. The path to lower cost per discovery is not producing more content. It is producing obvious source-of-truth content with visible proofs that travel.

What is LLM Optimisation, and how is it different from traditional SEO

LLM Optimisation is the discipline of making your brand the preferred, cited source inside AI answers. It aligns four levers. Machine-discoverable structure. Authoritative, question-aligned answers. Wide distribution into model-friendly surfaces. Measurement of answer presence, accuracy, and latency rather than rank.

How large language models form answers

Models predict tokens based on the prompt and any retrieved context. Retrieval brings in exact passages from trusted sources. Confidence rises when the retrieved text is short, precise, and matches the phrasing of the question. Your work is to engineer that retrievable context. Use standard terms. Keep claims consistent across every surface. Place the proof next to the claim.
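To make the phrasing point concrete, here is a toy sketch that assumes a simple bag-of-words retriever with cosine similarity. Production assistants use embeddings, BM25, or hybrid search, and their internals are not public, so treat this as an illustration of the principle only. The brand "Acme" and both passages are hypothetical.

```python
import math
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    # Lowercase word tokens; a toy stand-in for a real retriever's tokenizer.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_passages(question: str, passages: list[str]) -> list[tuple[float, str]]:
    # Score every passage against the question, best match first.
    q = Counter(tokens(question))
    scored = [(cosine(q, Counter(tokens(p))), p) for p in passages]
    return sorted(scored, reverse=True)


question = "How long does Acme take to implement?"
precise = "Acme takes 4 to 6 weeks to implement for most mid-market teams."
vague = "Our journey began with a vision to help teams everywhere work faster and better."
for score, passage in rank_passages(question, [vague, precise]):
    print(f"{score:.2f}  {passage}")
```

The short passage that reuses the question's words ("take", "to implement") and carries a number outranks the brand story, which is the behaviour the paragraph above describes.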

Why keywords and backlinks alone no longer move the needle

Keywords help engines classify. Backlinks hint at popularity. Assistants need canonical facts with provenance. If your site does not present facts in clean, atomic form with proofs and structure, the model will prefer third-party summaries even when you rank well. The reward is shifting from positions to presence inside answers, supported by visible citations and correct phrasing of your claims.

Which business goals does LLM Optimisation actually move

- Inbound lead quality and speed to answer

When buyers see your product named and your strengths explained with proof inside the first answer, they arrive warmer, with fewer basic questions. Expect higher acceptance rates for discovery calls and faster movement to technical evaluation.

- Sales enablement inside buyer chat flows

Enterprise buyers now use chat inside CRMs and collaboration tools to shortlist vendors, list must-haves, and translate requirements into evaluation criteria. Optimised answers reduce friction later. Sales teams reuse the same canonical answers in proposals and in recorded demos.

- Brand safety, accuracy, and claim integrity

Governed source-of-truth content lowers the risk of misquotes. When a misquote appears, you have a playbook. Update the source. Log the change. Publish the correction. Instrument the questions so you can verify the fix inside the assistant within days.

How to measure visibility inside AI answers when there is no classic rank

Answer presence rate and share of voice inside assistants

- Define a question set per stage in the funnel.

- Run each question across the assistants that matter in your market.

- Record whether your brand appears in the synthesised answer or the visible citations.

- Share of voice is the fraction of total citations and mentions that belong to you.
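A minimal sketch of the two metrics, assuming you log one record per question per assistant run. The `AnswerRun` fields and the brand names are hypothetical; the definitions follow the list above: presence counts a run when the brand is in the answer or its citations, and share of voice is your fraction of all citations and mentions.

```python
from dataclasses import dataclass, field


@dataclass
class AnswerRun:
    question: str
    assistant: str
    brand_in_answer: bool  # brand named in the synthesised paragraph
    brand_cited: bool      # brand URL among the visible citations
    mentions: dict[str, int] = field(default_factory=dict)  # brand -> citations + mentions


def presence_rate(runs: list[AnswerRun]) -> float:
    # Fraction of runs where the brand appears in the answer or its citations.
    if not runs:
        return 0.0
    hits = sum(1 for r in runs if r.brand_in_answer or r.brand_cited)
    return hits / len(runs)


def share_of_voice(runs: list[AnswerRun], brand: str) -> float:
    # The brand's fraction of all citations and mentions across the runs.
    total = sum(sum(r.mentions.values()) for r in runs)
    ours = sum(r.mentions.get(brand, 0) for r in runs)
    return ours / total if total else 0.0


runs = [
    AnswerRun("What is X?", "assistant_a", True, True, {"acme": 2, "rival": 1}),
    AnswerRun("What is X?", "assistant_b", False, False, {"rival": 3}),
    AnswerRun("Acme vs Rival pricing?", "assistant_a", False, True, {"acme": 1, "rival": 1}),
    AnswerRun("Acme vs Rival pricing?", "assistant_b", False, False),
]
print(presence_rate(runs), share_of_voice(runs, "acme"))
```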

Coverage of high-value questions along the funnel

- Awareness - Concepts, definitions, use cases.

- Consideration - Feature comparisons, fit by industry, and integration depth.

- Decision - Pricing qualifiers, security posture, legal terms, and implementation timeline.

- Adoption - Set up steps, troubleshooting, and ROI validation.

Coverage is the percent of these questions for which you have a canonical answer with proofs that an assistant can lift.
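Coverage can be tracked per funnel stage with a few lines of code. This sketch assumes each question is stored as a dict with hypothetical `stage`, `canonical_url`, and `proof_url` keys, and counts a question as covered only when both a canonical answer and a proof exist.

```python
FUNNEL_STAGES = ("awareness", "consideration", "decision", "adoption")


def coverage_by_stage(questions: list[dict]) -> dict[str, float]:
    # Percent of questions per stage that have a canonical answer AND a proof.
    # A stage with no questions yet reports 0.0 so the gap stays visible.
    result = {}
    for stage in FUNNEL_STAGES:
        in_stage = [q for q in questions if q["stage"] == stage]
        covered = [q for q in in_stage if q.get("canonical_url") and q.get("proof_url")]
        result[stage] = len(covered) / len(in_stage) if in_stage else 0.0
    return result


questions = [
    {"stage": "awareness", "canonical_url": "/glossary/x", "proof_url": "/proofs/x"},
    {"stage": "awareness", "canonical_url": "/faq/y", "proof_url": None},
    {"stage": "decision", "canonical_url": None, "proof_url": None},
]
print(coverage_by_stage(questions))
```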

Accuracy score and hallucination risk

- Evaluate a sample of answers weekly.

- Mark true, partially true, false, or missing.

- Track misquote patterns. Location, phrasing, and likely source.

- Link each fix to a change in a specific source of truth page.
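The weekly review can be reduced to one score plus a list of the pages causing misquotes. The weights below are an assumption (the article names the labels, not how to score them): true = 1, partially true = 0.5, false = 0, and missing answers are left out of accuracy because they already show up as low presence. The audit keys are hypothetical.

```python
from collections import Counter
from typing import Optional

# Assumed weights for the audit labels.
WEIGHTS = {"true": 1.0, "partial": 0.5, "false": 0.0}


def accuracy_score(labels: list[str]) -> Optional[float]:
    # Average weight of the answers that exist; None if every answer was missing.
    scored = [WEIGHTS[label] for label in labels if label != "missing"]
    return sum(scored) / len(scored) if scored else None


def misquote_sources(audits: list[dict]) -> Counter:
    # Count false or partial answers by likely source page, to prioritise fixes.
    return Counter(a["likely_source"] for a in audits if a["label"] in ("false", "partial"))


audits = [
    {"label": "false", "likely_source": "/pricing"},
    {"label": "partial", "likely_source": "/pricing"},
    {"label": "true", "likely_source": "/docs/setup"},
]
print(accuracy_score([a["label"] for a in audits]), misquote_sources(audits).most_common(1))
```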

Latency and time to a confident answer

- Measure response time from prompt to answer for your questions.

- Lower latency usually correlates with cleaner retrieval and fewer cluttered sources.

- Treat large spikes as a signal to simplify the structure or add a structured summary.
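A sketch of latency measurement and spike detection. `ask` is a hypothetical callable wrapping whichever assistant you query, and the 2x-median spike threshold is an assumption to tune against your own baseline variance.

```python
import statistics
import time


def timed(ask, prompt: str) -> tuple[str, float]:
    # Call the (hypothetical) assistant wrapper and return (answer, elapsed ms).
    start = time.perf_counter()
    answer = ask(prompt)
    return answer, (time.perf_counter() - start) * 1000


def latency_spikes(latencies_ms: dict[str, list[float]], factor: float = 2.0) -> dict[str, float]:
    # Flag questions whose latest run exceeds `factor` x the median of earlier runs.
    flagged = {}
    for question, samples in latencies_ms.items():
        if len(samples) < 3:
            continue  # too few runs to establish a baseline
        baseline = statistics.median(samples[:-1])
        latest = samples[-1]
        if latest > factor * baseline:
            flagged[question] = latest
    return flagged


history = {
    "q1": [800, 900, 850, 2500],  # spike: 2500 > 2 x 850
    "q2": [700, 750, 720, 760],   # normal
    "q3": [100, 5000],            # not enough history
}
print(latency_spikes(history))
```

A flagged question is a prompt to simplify that page's structure or add a structured summary, as the list above suggests.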

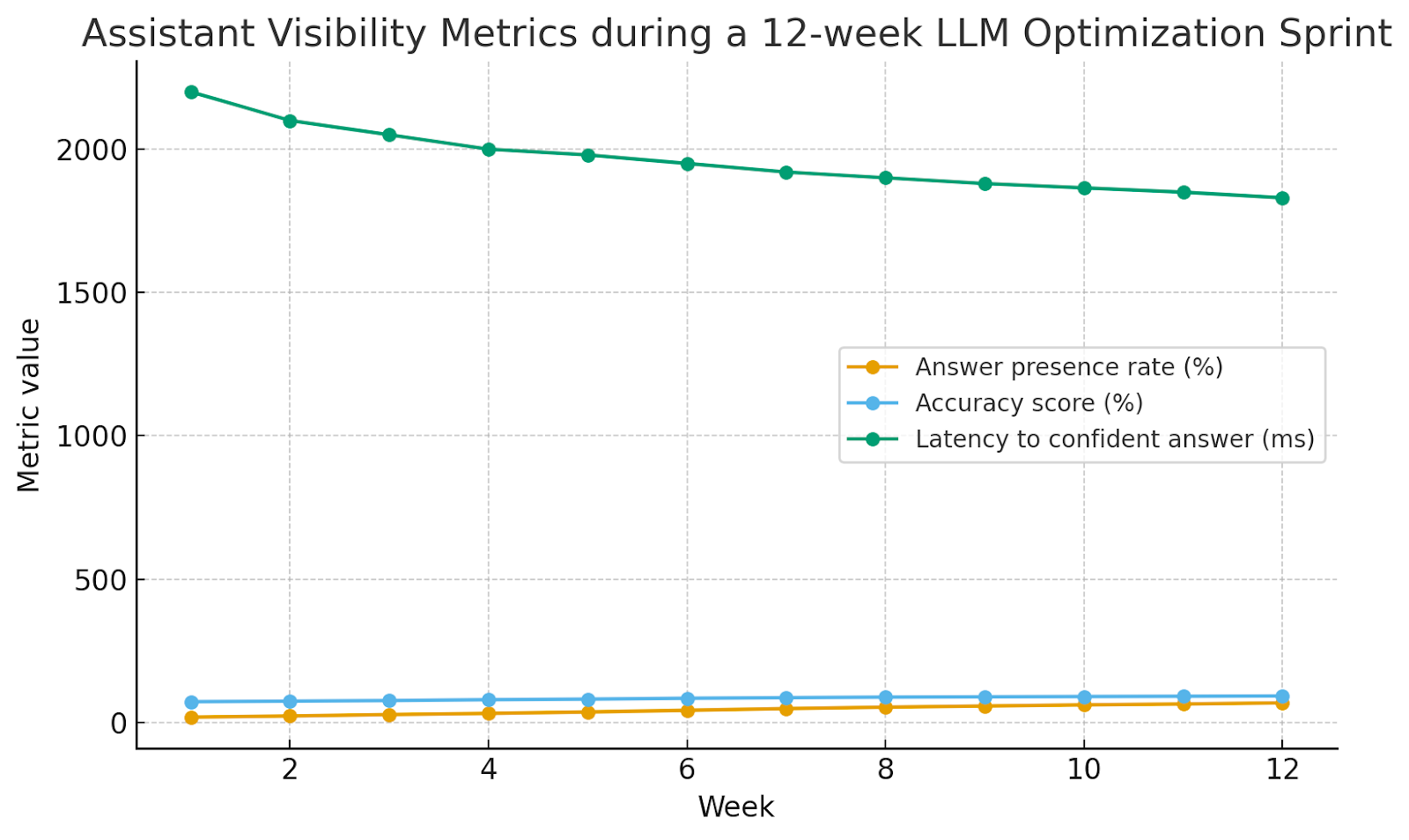

Graph 1. Assistant visibility metrics over twelve weeks

Use the chart above as a realistic benchmark for a focused program. Answer presence and accuracy rise week over week as structured sources and proofs go live. Latency falls as retrieval improves.

What content formats do LLMs prefer when assembling answers

Design principles

- One page. One canonical answer per question.

- Keep claims atomic. Use numbers, ranges, and units.

- Put the proof next to the claim.

- Provide a short, quotable statement under 120 words.
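Two of these principles can be linted automatically before publishing. This is a minimal sketch, assuming whitespace word counts and treating "contains at least one digit" as a rough proxy for an atomic, measurable claim; it cannot check that the proof sits next to the claim.

```python
import re

MAX_WORDS = 120  # "under 120 words", per the design principles


def check_canonical_answer(text: str) -> list[str]:
    """Return a list of problems; an empty list means the answer passes."""
    problems = []
    words = text.split()
    if len(words) >= MAX_WORDS:
        problems.append(f"{len(words)} words; keep it under {MAX_WORDS}")
    if not re.search(r"\d", text):
        problems.append("no number, range, or unit; make the claim atomic and measurable")
    return problems


print(check_canonical_answer("Acme takes 4 to 6 weeks to implement for most mid-market teams."))
print(check_canonical_answer("We are fast and easy to implement."))
```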

Formats that win

- Source of truth pages per product, feature, and policy.

- Q&A hubs organised by intent cluster and stage.

- Fact glossaries that define terms and acronyms.

- Comparison tables with standard attributes and explicit assumptions.

- Public proofs. Audit extracts, certification IDs, analyst quotes, partner listings, and signed statements.

- Calculators and checklists for tasks the assistant can lift step by step.

- Change logs with dates, versions, and the reason for change.

Formats that lose

- Long opinion pieces that bury facts.

- Generic listicles and farmed content.

- Unstructured PDFs without extractable text.

- Multiple conflicting versions of the same claim across pages.

How to structure your site and knowledge so LLMs can trust you

Intent

Make your brand the easiest to cite.

Decision trigger

Approve architecture and ops updates.

The FTA’s MUVERA model for machine-ready credibility

M. Machine discoverable structure

- Clean URL patterns.

- XML sitemaps for docs, FAQ, glossary, API, and policies.

- Schema.org markup for Product, FAQPage, HowTo, Organization, and BreadcrumbList.

- Entity reconciliation. Use clear, consistent brand, product, and feature names, and add sameAs links that point to your official profiles.
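As one way to generate the markup, here is a sketch that emits JSON-LD using the schema.org `FAQPage`, `Question`, `Answer`, and `Organization` types. The brand name, URLs, and profile links are hypothetical; the output is meant to sit inside a `<script type="application/ld+json">` tag on the page.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    # schema.org FAQPage built from (question, canonical answer) pairs.
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


def organization_jsonld(name: str, url: str, same_as: list[str]) -> str:
    # Organization markup; sameAs links help reconcile the brand entity.
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,
    }
    return json.dumps(data, indent=2)


print(faq_jsonld([("How long does Acme take to implement?",
                   "Acme takes 4 to 6 weeks to implement for most mid-market teams.")]))
print(organization_jsonld("Acme", "https://example.com",
                          ["https://www.linkedin.com/company/example"]))
```

Generating markup from the same records as the page copy keeps the structured data and the visible answer word-for-word identical.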

U. Useful intent clusters and task completion

- Group content by the jobs buyers and users want to complete.

- Make task completion the purpose of each page.

- Include short checklists, steps, and expected outcomes.

V. Verifiable sources, footnotes, and public proofs

- Link a proof to every numeric or regulated claim.

- Mirror key proofs on a neutral surface if licensing allows.

- Keep proofs short, stable, and easy to parse.

E. Expert authors and reviewer pages

- Show owners and reviewers with credentials.

- Map authors to topics.

- Publish the scope of responsibility and the last review date.

R. Reputable mentions and partner signals

- Catalogue analyst notes, awards, certifications, integrations, and marketplace listings.

- Use IDs and numbers where possible so models can reconcile proofs.

A. Active updates and change logs

- Add a visible last-updated date to every source of truth page.

- Publish a public change log for product, security, and pricing.

- Link change entries back to the updated page.
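A sketch of a change log record and renderer, assuming the fields named in this article (date, version, reason, the updated page, and the old and new claim for corrections). The field names, URLs, and entries are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ChangeEntry:
    changed_on: date
    version: str
    page_url: str   # the source-of-truth page that was updated
    reason: str
    old_claim: str = ""
    new_claim: str = ""


def render_changelog(entries: list[ChangeEntry]) -> str:
    # Newest first; every entry links back to the page it changed.
    lines = []
    for e in sorted(entries, key=lambda x: x.changed_on, reverse=True):
        line = f"{e.changed_on.isoformat()} {e.version}: {e.reason} ({e.page_url})"
        if e.old_claim and e.new_claim:
            line += f'\n  was: "{e.old_claim}"\n  now: "{e.new_claim}"'
        lines.append(line)
    return "\n".join(lines)


entries = [
    ChangeEntry(date(2024, 3, 1), "1.1", "https://example.com/pricing", "Added annual plan"),
    ChangeEntry(date(2024, 6, 1), "1.2", "https://example.com/pricing", "Corrected seat limit",
                "Up to 50 seats", "Up to 100 seats"),
]
print(render_changelog(entries))
```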

Where to place data so models can actually find and use it

- Open source-friendly artefacts. Model cards, data dictionaries, and structured tables with clear licenses.

- Partner knowledge bases and developer hubs. Many assistants trust marketplace docs, support portals, and integration pages.

- Industry data portals and public repositories. Host CSV or JSON for reference tables that assistants can use as ground truth.

- Owned Q&A surfaces and community threads. Curate and link these to the source of truth pages.

- Public issue trackers for technical products. Closed issue notes act as verified troubleshooting references.

- Avoid duplicates. Point all variations back to one canonical location.

- Keep metadata clean. Titles, descriptions, and headers should match the canonical phrasing of the question and answer.

- Refresh cadence. Use version tags so models can pick the newest valid source.
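A sketch of publishing one reference table as both CSV and JSON with a shared version tag, plus a helper that picks the newest tag. The table contents are hypothetical, and the dotted numeric tag format (for example `2024.06`) is an assumption; the helper compares tags numerically so `2.10` beats `2.9`.

```python
import csv
import io
import json


def export_reference_table(rows: list[dict], version: str) -> tuple[str, str]:
    # Return (csv_text, json_text) for the same table, both carrying the version tag.
    if not rows:
        raise ValueError("empty table")
    fieldnames = list(rows[0].keys()) + ["version"]
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        writer.writerow({**row, "version": version})
    json_text = json.dumps({"version": version, "rows": rows}, indent=2)
    return buf.getvalue(), json_text


def newest(versions: list[str]) -> str:
    # Compare dotted numeric tags as integer tuples, not as strings.
    return max(versions, key=lambda v: tuple(int(p) for p in v.split(".")))


csv_text, json_text = export_reference_table(
    [{"plan": "Team", "seats": 10, "price_usd": 99}], "2024.06")
print(csv_text)
print(newest(["2.9", "2.10", "1.12"]))
```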

What does a practical LLM optimisation sprint look like for a mid-market enterprise

Week 1 to 2. Audit the questions that matter

- Interview sales, solutions, and support. Build the top one hundred questions by stage and by segment.

- Map each question to existing pages and proofs.

- Score presence and accuracy inside assistants.

- Select the top ten questions with the highest revenue impact and the highest risk if misquoted.
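The selection step can be made explicit with a simple priority score. This sketch assumes each question is scored on hypothetical 1 to 5 scales for revenue impact and misquote risk; multiplying them favours questions that rank high on both, and Python's stable sort keeps ties in input order.

```python
def top_questions(questions: list[dict], n: int = 10) -> list[str]:
    # Priority = revenue impact x misquote risk (both on an assumed 1-5 scale).
    ranked = sorted(
        questions,
        key=lambda q: q["revenue_impact"] * q["misquote_risk"],
        reverse=True,
    )
    return [q["text"] for q in ranked[:n]]


backlog = [
    {"text": "What does Acme cost per seat?", "revenue_impact": 5, "misquote_risk": 1},
    {"text": "Is Acme SOC 2 compliant?", "revenue_impact": 4, "misquote_risk": 4},
    {"text": "Does Acme integrate with our CRM?", "revenue_impact": 3, "misquote_risk": 3},
]
print(top_questions(backlog, n=2))
```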

Week 3 to 4. Create ten authoritative answers with proofs

- Draft canonical answers under 120 words per question.

- Publish one source of truth page per answer.

- Place the proof within three lines of the claim.

- Add FAQPage and HowTo schema.

- Record the owner, reviewer, and review cadence.

Week 5 to 6. Publish structured facts, glossary, and a calculator

- Launch a glossary with clear, unambiguous definitions of your core terms.

- Expose reference tables in CSV and JSON.

- Build one calculator or checklist that solves a real task the assistant can adopt.

Week 7. Instrument visibility and accuracy

- Track answer presence, share of voice, accuracy score, and latency for the pilot question set.

- Set targets for week twelve based on the benchmark graph in this report.

- Run a red-team test to provoke misquotes and document fixes.

Week 8 to 12. Iterate, expand, and brief sales

- Expand to thirty priority answers.

- Add partner proofs and independent corroboration.

- Build a talk track and proposal snippets that reuse the same canonical answers.

- Review the dashboard weekly with CMO and CPO.

- At week twelve, lock the scale plan for the next quarter.

How to govern claims so assistants never misquote you

Source of truth pages with signed statements

- Mark regulated or sensitive claims.

- Include the signing owner and date.

- Keep one canonical location for each core statement. Redirect variants.

Public change log for corrections

- Record what changed, when, and why.

- Link the old claim to the new claim.

- Publish a short notice when a high-risk correction is made.

Red lines on product claims and regulated language

- Maintain a list of prohibited phrases.

- Maintain a list of preferred phrasing that meets compliance and clarity standards.

- Route updates through a fast-lane review with time-bound SLAs.
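The two lists can be enforced with a pre-publish check. In this sketch the phrases and their preferred replacements are hypothetical placeholders; the real lists come from legal and compliance. Matching is case-insensitive and on word boundaries so partial words do not trigger.

```python
import re

# Hypothetical red lines: prohibited phrase -> preferred phrasing guidance.
RED_LINES = {
    "guaranteed": "contractually committed (cite the SLA)",
    "100% secure": "independently audited (cite the report ID)",
    "best in class": "name the benchmark and the result",
}


def red_line_check(text: str) -> list[tuple[str, str]]:
    # Return (prohibited phrase, preferred phrasing) for every red line found.
    hits = []
    for phrase, preferred in RED_LINES.items():
        if re.search(rf"\b{re.escape(phrase)}\b", text, flags=re.IGNORECASE):
            hits.append((phrase, preferred))
    return hits


draft = "Uptime is Guaranteed and the platform is 100% secure."
for phrase, preferred in red_line_check(draft):
    print(f"replace '{phrase}': {preferred}")
```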

Escalation path when AI answers go wrong

- Capture the offending answer with timestamps and prompts.

- Identify the source that needs correction.

- Update the source and the change log.

- Notify affected customers if the risk is material.

- Re-test the same prompts and log the resolution.

Who owns LLM optimisation and how it scales smoothly

- CMO and CPO share the scoreboard. Answer presence, accuracy, and pipeline impact appear in the weekly business review.

- Content and product documentation run from a single backlog with one intake form. Each item has an owner, a reviewer, a target question, and a proof.

- Legal review is a fast lane with templates for claims, proof formats, and red lines.

- Data and analytics own the observability layer. They maintain the dashboard for presence, accuracy, latency, and coverage.

- Sales enablement uses the same canonical answers in talk tracks, proposals, and RFP libraries.

- Support and success contribute new questions and validate adoption content.

Tech Stack to Equip Your Team for LLM Optimisation

- Crawler and answer presence tracking. Discover where your brand appears inside assistants and summaries and how often.

- Fact store and citation generator. Centralise claims with links to proofs and export snippet-ready citations.

- Schema and glossary management. Validate structured data at scale and keep a single dictionary of terms.

- Annotation and evaluation workflow. Tag questions, mark accuracy, add remediation tasks, and track cycle time to fix.

- Optional retrieval sandbox. Test how your content is retrieved and quoted by a retrieval-augmented generation (RAG) pipeline before it reaches assistants.

- Optional prompt and policy vault. Store approved prompts, guardrails, and response templates for internal copilots.

The rule is simple. Every tool must either increase answer presence, increase accuracy, or reduce time to fix. Anything else is noise.
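To show what the fact store and citation generator do at their simplest, here is an in-memory sketch. A real deployment would sit on a database or CMS; the `Fact` fields, the ID scheme, and the example claim and URL are hypothetical. The store rejects duplicate IDs so each claim keeps one canonical record.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Fact:
    fact_id: str
    claim: str
    proof_url: str
    owner: str
    reviewed_on: date


class FactStore:
    def __init__(self) -> None:
        self._facts: dict[str, Fact] = {}

    def add(self, fact: Fact) -> None:
        # One canonical record per fact ID; duplicates are rejected, not overwritten.
        if fact.fact_id in self._facts:
            raise ValueError(f"duplicate fact id {fact.fact_id}")
        self._facts[fact.fact_id] = fact

    def citation(self, fact_id: str) -> str:
        # Snippet-ready citation: claim, proof link, and review date together.
        f = self._facts[fact_id]
        return f"{f.claim} Source: {f.proof_url} (reviewed {f.reviewed_on.isoformat()})"


store = FactStore()
store.add(Fact("sec-001", "Acme holds ISO/IEC 27001 certification.",
               "https://example.com/trust/iso-27001", "security-team", date(2024, 5, 1)))
print(store.citation("sec-001"))
```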

Why brand matters more than ever in the age of LLM answers

- Signals that travel. Analyst coverage, credible awards, integrations, and high-quality partner listings are machine-visible and persuasive to humans.

- Clear point of view. Executives who publish specific, testable opinions on owned channels create anchor phrases that assistants reuse.

- Consistency across the stack. Sales sheets, docs, support macros, and web copy must share the same numbers and wording. Inconsistency invites misquotes.

What executives will see in six months if we do this right

- Higher presence across priority questions. Expect a visible rise in answer presence rate and citation share for your brand.

- Faster qualified inbound and shorter cycles. Expect better stage conversion and fewer clarification emails because canonical answers travel with the buyer.

- Fewer inaccurate answers. Accuracy scores rise while misquote incidents drop due to structured claims and proofs.

- Lower content waste. Fewer generic long-form posts. More atomic facts that power both public assistants and internal copilots.

- Clearer ROI. Link improvements in presence and accuracy with changes in pipeline velocity and cost to acquire.

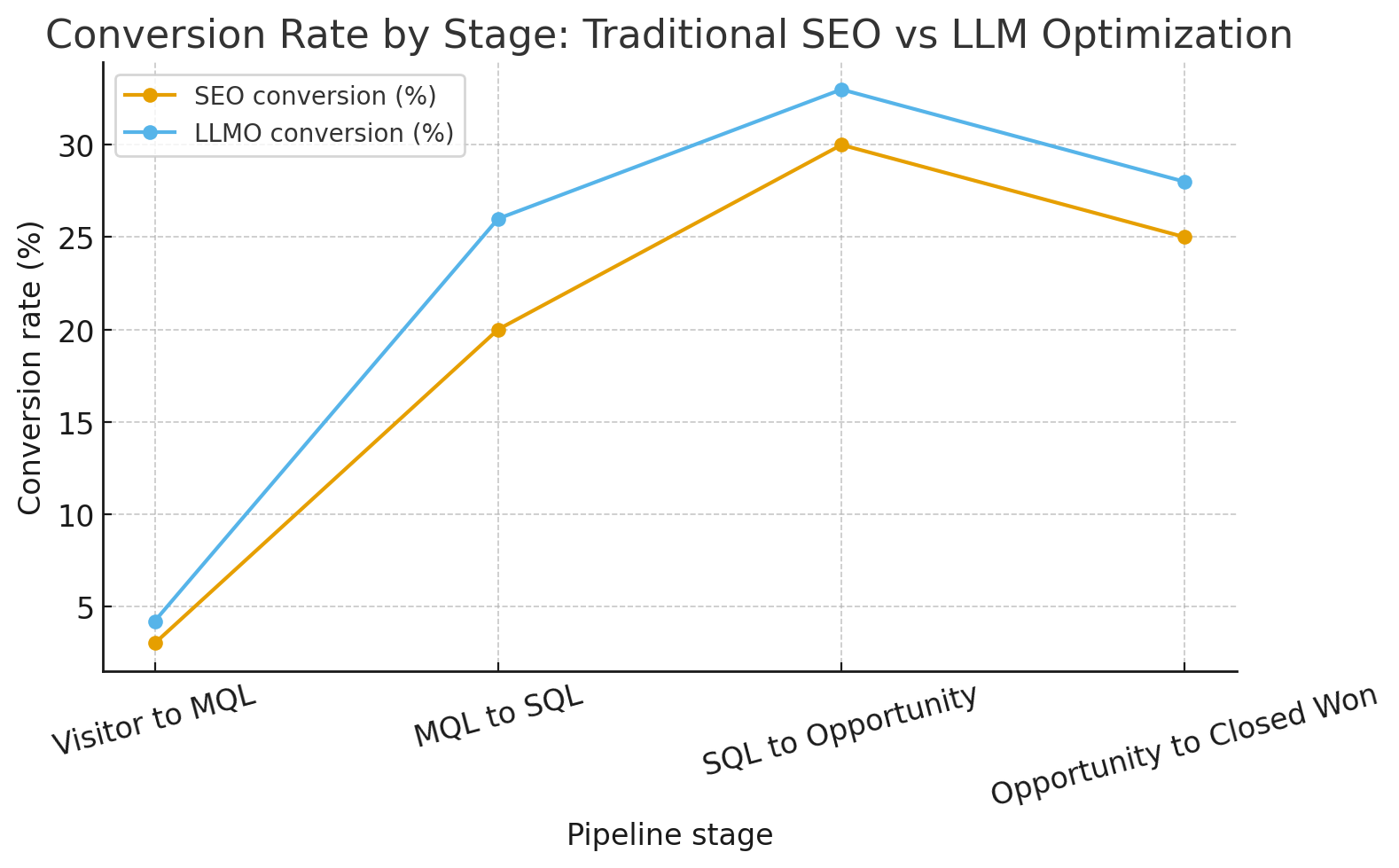

The chart above shows the directional impact by stage: typical conversion lifts and time reductions when assistants use your canonical answers and proofs.

Your first move in the next ten days

- Approve a ninety-day pilot with one accountable owner.

- Finalise the top one hundred questions by stage and segment.

- Publish ten source of truth answers with proofs and schema.

- Launch a glossary and a calculator that completes a core task.

- Deploy an assistant visibility dashboard that tracks presence, accuracy, latency, and coverage.

- Run a red-team review to identify and fix misquotes.

- Brief sales and support on the new canonical answers and update proposal templates.

- Schedule weekly reviews with CMO and CPO to remove blockers.

- Lock the scale plan and budget for quarter two based on week twelve results.

Implementation checklists

- Question and intent

- Canonical answer under 120 words

- Proof link and file

- Structured data type used

- Owner and reviewer

- Last updated date and next review date

- Change log link

- Distribution targets and version ID
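The checklist can double as a validation rule in your content pipeline. This sketch assumes each source-of-truth page is stored as a dict whose hypothetical keys mirror the checklist items, and it also flags answers of 120 words or more and reviews that are past due.

```python
from datetime import date

REQUIRED = (
    "question", "intent", "canonical_answer", "proof_url", "schema_type",
    "owner", "reviewer", "last_updated", "next_review", "changelog_url",
    "distribution_targets", "version_id",
)


def checklist_gaps(page: dict, today: date) -> list[str]:
    # Missing fields first, then content and freshness problems.
    gaps = [f"missing {field}" for field in REQUIRED if not page.get(field)]
    answer = page.get("canonical_answer", "")
    if answer and len(answer.split()) >= 120:
        gaps.append("canonical answer is 120 words or more")
    next_review = page.get("next_review")
    if next_review and next_review < today:
        gaps.append("review overdue")
    return gaps


page = {
    "question": "How long does Acme take to implement?",
    "intent": "decision",
    "canonical_answer": "Acme takes 4 to 6 weeks to implement for most mid-market teams.",
    "proof_url": "https://example.com/proofs/implementation",
    "schema_type": "FAQPage",
    "owner": "product-marketing",
    "reviewer": "solutions-engineering",
    "last_updated": date(2024, 7, 1),
    "next_review": date(2025, 1, 1),
    "changelog_url": "https://example.com/changelog",
    "distribution_targets": ["docs", "partner-marketplace"],
    "version_id": "1.2",
}
print(checklist_gaps(page, today=date(2025, 6, 1)))
```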

Accuracy audit template

- Question

- Assistant used and prompt

- Presence status

- Citation status

- Accuracy score and notes

- Fix required and owner

- Date fixed and retest result

Distribution map

- Owned surfaces. Docs, FAQ, glossary, calculators, change logs, trust centre.

- Partner surfaces. Marketplaces, app stores, integration hubs, implementation partners.

- Public repositories. Data portals and research hubs where structured tables can live.

- Community and Q&A. Moderated threads that link back to canonical answers.

Building Long-Term Advantage in the Age of AI Answers

Discovery has always rewarded clarity and authority. The interface has changed over time, but the rules have not. If you make your facts obvious, verifiable, and easy to retrieve, assistants will carry your message into the first answer.

That lowers friction for buyers and costs for you. The LLM Optimisation program in this report is the shortest route to that outcome.

How Large Language Models Rank and Reference Brands?