How Modern Search Engines Rank Content: Cracking RRF, Embeddings & Semantic Scoring


Modern search has evolved far beyond matching strings of text. According to Google, 15% of the searches it processes every day have never been seen before. This constant stream of novel queries means that search engines can no longer rely solely on keyword matching.

As Google’s search team has explained, it has built ways to return results for queries it can’t anticipate. For marketers, product owners and content strategists, this shift means that old‑school SEO tactics, such as stuffing articles with the exact phrase, are no longer sufficient. Instead, we need to understand how search engines interpret meaning, combine different retrieval methods and then re‑rank results based on semantics.

This post explains how modern search engines work through three core pillars:

- Vector embeddings

- Reciprocal rank fusion (RRF)

- Semantic scoring

By the end of this post, you’ll know why your perfectly optimised page might still be outranked and what you can do about it.

Why understanding ranking mechanics matters

Traditional search engines used keyword‑centric algorithms like BM25. BM25 is an evolution of TF‑IDF that scores documents based on term frequency and inverse document frequency, with an adjustment for document length. Documents that contain the search terms more often (with diminishing returns for each extra repetition) receive higher scores, especially when those terms are rare in the broader index.

This method powered search for decades, but it has limitations: it can miss relevant pages that use synonyms or contextually related phrases, and it treats a query like a bag of words without understanding the relationships between them.
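To make the BM25 description concrete, here is a minimal Python sketch of Okapi BM25 scoring. The parameter values k1 = 1.5 and b = 0.75 are common defaults, not values any particular engine is known to use, and the whitespace tokeniser and three toy documents are assumptions for illustration.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each tokenised document against a tokenised query with Okapi BM25."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if term not in tf:
                continue  # no exact match, no contribution: BM25 cannot see synonyms
            # Rarer terms get a larger inverse document frequency (the 1 + keeps it positive).
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # k1 makes term frequency saturate; b normalises for document length.
            norm = tf[term] * (k1 + 1) / (tf[term] + k1 * (1 - b + b * len(doc) / avg_len))
            score += idf * norm
        scores.append(score)
    return scores

docs = [
    "healthy apple recipes for busy weeknights".split(),
    "apple apple apple pie with extra apple".split(),
    "nutritious fruit dishes for the whole family".split(),
]
scores = bm25_scores("healthy apple recipes".split(), docs)
print(scores)
```

The page that repeats "apple" four times still scores below the page that matches all three query terms once, because term frequency saturates and "apple" is the least rare term. The third page covers a similar idea in different words and scores exactly zero, which is the synonym blind spot described above.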

Many of today’s search engines combine lexical search (BM25 or similar term‑frequency methods) with vector or semantic search, and then apply a final re‑ranking step.

This multi‑stage process means that your content must perform well on multiple fronts: textual relevance, semantic relatedness and overall intent satisfaction.

Understanding how those layers work helps you create content that surfaces consistently in all three stages.

The query journey - From keywords to concepts

When a user types a query, a hybrid search engine typically runs it through several stages:

- Lexical retrieval with BM25. The engine identifies documents containing the search terms and scores them based on how often those terms appear and how rare they are across the index.

- Vector retrieval. The same query is converted into a high‑dimensional vector, and the engine retrieves documents whose embeddings are close to that vector. Embeddings are numerical representations of words and sentences that capture their meaning. In a vector space, concepts with similar meanings lie close together, so semantically related content can be found even when there are no exact keyword overlaps.

- Result fusion (RRF). The top documents from lexical and vector search are merged using a reciprocal rank fusion method that prioritises documents appearing consistently high across both lists. RRF’s rank‑based aggregation avoids problems with score normalisation, making hybrid search stable even when the underlying scoring distributions differ.

- Semantic scoring (re‑ranking). This is the final exam: deep learning models, such as BERT, evaluate how semantically relevant each candidate document is to the query.

  - Azure’s semantic ranker, for example, uses multilingual deep learning models adapted from Bing to apply a secondary ranking over an initial BM25 or RRF result.

  - It generates captions summarising why each result is relevant and assigns a reranker score from 4 (highly relevant) down to 0 (irrelevant).

The entire process happens in milliseconds, but understanding each step helps us design content that scores well across the pipeline.

Pillar 1: Embeddings - The language of AI

An embedding is a dense vector that encodes the meaning of a word, phrase or document. Machines cannot understand human language directly, so each term is converted into numbers in a high‑dimensional space.

In that “semantic space,” similar concepts cluster together. For example, the vectors for king and queen are much closer to each other than either is to banana. Vector arithmetic can even capture relationships: in well‑known word‑embedding models, “king − man + woman” lands close to “queen.”
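The king/queen relationship can be reproduced with a toy example. The three‑dimensional vectors below are hand‑made and hypothetical (real models learn hundreds of dimensions from data); the sketch computes king − man + woman and looks up the closest remaining word by cosine similarity, excluding the three input words as analogy tests conventionally do.

```python
import math

# Hand-made toy vectors (hypothetical, not from a real model).
# Dimensions loosely mean: [royalty, maleness, fruitiness].
vectors = {
    "king":   [0.9, 0.9, 0.0],
    "queen":  [0.9, 0.1, 0.0],
    "man":    [0.1, 0.9, 0.0],
    "woman":  [0.1, 0.1, 0.0],
    "banana": [0.0, 0.1, 0.9],
}

def cosine(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def nearest(target, exclude=()):
    """Return the vocabulary word whose vector is most cosine-similar to target."""
    candidates = (w for w in vectors if w not in exclude)
    return max(candidates, key=lambda w: cosine(vectors[w], target))

# king - man + woman, computed element by element.
analogy = [k - m + w for k, m, w in zip(vectors["king"], vectors["man"], vectors["woman"])]
print(nearest(analogy, exclude={"king", "man", "woman"}))  # queen
print(cosine(vectors["king"], vectors["queen"]) > cosine(vectors["king"], vectors["banana"]))  # True
```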

Embeddings allow search engines to retrieve semantically related content even when the query uses different words.

Imagine someone searching for “man’s best friend” and landing on a page about dogs. The phrase “man’s best friend” shares no words with “dog,” but in vector space, they reside in the same neighbourhood.

Dense vs. sparse embeddings

Earlier search systems relied on sparse vectors with one dimension per vocabulary term, almost all of them zero, that record which terms appear (often with weights such as TF‑IDF). Dense embeddings, by contrast, are compact arrays of real numbers that capture nuanced relationships between entities. Dense embeddings excel at understanding context and synonyms, whereas sparse vectors are limited to exact keyword matches.
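A minimal sketch of the difference, using the “man’s best friend” example and cosine similarity as the comparison. Sparse bag‑of‑words vectors for the query and for “dog” share no terms, so their similarity is exactly zero. The dense vectors are invented numbers standing in for what a trained embedding model might output; they are not from any real model.

```python
import math
from collections import Counter

def cosine(a, b):
    """Cosine similarity between two equal-length numeric vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def sparse_vector(text, vocab):
    """Bag-of-words term counts over a fixed vocabulary (mostly zeros in practice)."""
    counts = Counter(text.lower().split())
    return [counts[term] for term in vocab]

query, doc = "man's best friend", "dog"
vocab = sorted(set(query.split()) | set(doc.split()))
print(cosine(sparse_vector(query, vocab), sparse_vector(doc, vocab)))  # 0.0: no shared terms

# Hypothetical dense embeddings, invented to mimic what a trained model might output.
dense = {
    "man's best friend": [0.72, 0.61, 0.05, 0.30],
    "dog":               [0.70, 0.58, 0.10, 0.35],
    "stock market":      [0.02, 0.05, 0.95, 0.10],
}
print(cosine(dense[query], dense[doc]) > cosine(dense[query], dense["stock market"]))  # True
```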

Search engines use cosine similarity to compare embedding vectors. Cosine similarity measures the angle between vectors; the smaller the angle, the more similar the texts. BERT and newer models produce bidirectional embeddings that consider the full context of each word, not just its immediate neighbours.

This contextual understanding allows search engines to interpret conversational queries and understand prepositions (for example, “flights to New York” vs. “flights from New York”).
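The preposition point can be shown directly. A bag‑of‑words representation ignores word order, so two queries describing opposite journeys (a two‑city extension of the example, chosen for illustration) become indistinguishable. Contextual models such as BERT read the whole sequence, which is what lets them tell such queries apart.

```python
from collections import Counter

def bag_of_words(text):
    """Order-insensitive representation: only term counts survive."""
    return Counter(text.lower().split())

a = "flights from New York to Boston"
b = "flights to New York from Boston"
# Opposite travel directions, yet identical bags of words.
print(bag_of_words(a) == bag_of_words(b))  # True
```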

Actionable steps for marketers

- Write for concepts, not just keywords. Brainstorm related entities, synonyms and phrases that describe the same idea and incorporate them naturally. Tools like AlsoAsked, ScaleNut or Thru can help you identify semantic clusters to cover.

- Create topic clusters. Group related articles around a central theme and interlink them. Search engines reward pages that comprehensively cover a topic across different intents: informational, navigational, and transactional.

- Use multimedia and structured data. Rich content like videos, step‑by‑step tutorials and FAQ schemas provides multiple contexts for embeddings to latch onto. Structured data helps search engines understand entities and relationships explicitly.
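As a sketch of the structured‑data step, the snippet below builds schema.org FAQPage markup as JSON‑LD. The helper name faq_jsonld and the sample question are hypothetical; the @type values (FAQPage, Question, Answer) and the mainEntity and acceptedAnswer properties come from the schema.org vocabulary.

```python
import json

def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

markup = faq_jsonld([
    ("What is reciprocal rank fusion?",
     "A method that merges ranked lists by summing 1/(k + rank) for each document."),
])
# The JSON output would be embedded in a <script type="application/ld+json"> tag on the page.
print(json.dumps(markup, indent=2))
```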

Pillar 2: Reciprocal Rank Fusion (RRF) - Fair hybrid ranking

When a query is executed, lexical and vector search return separate ranked lists. Combining them naïvely (for example, by taking an arithmetic mean of scores) can cause instability because lexical and semantic scores lie on different scales: BM25 scores are unbounded, while cosine similarities fall between −1 and 1.

Reciprocal rank fusion solves this by aggregating on rank position rather than raw scores. For each list a document appears in, RRF gives it 1/(k + rank), where k is a smoothing constant (60 is a common default), and sums these reciprocals.

Documents that consistently rank near the top across methods receive higher combined scores, while outliers have less influence. This produces stable rankings even when score distributions vary significantly.
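Here is a minimal Python sketch of RRF, assuming each retriever returns a list of document IDs ordered best first and using k = 60. The document IDs and raw scores are hypothetical. The last few lines contrast RRF with naive score averaging, where BM25’s larger scale decides the winner on its own.

```python
def reciprocal_rank_fusion(ranked_lists, k=60):
    """Fuse ranked lists of document IDs (best first) with RRF.

    Each document earns 1 / (k + rank) from every list it appears in (rank starts
    at 1); documents missing from a list earn nothing from that list.
    """
    scores = {}
    for ranking in ranked_lists:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))

# Hypothetical result lists from the two retrievers.
bm25_ranking = ["doc_a", "doc_b", "doc_c"]
vector_ranking = ["doc_b", "doc_c", "doc_a"]
fused = reciprocal_rank_fusion([bm25_ranking, vector_ranking])
for doc_id, score in fused:
    print(f"{doc_id}: {score:.6f}")  # doc_b: 0.032522, doc_a: 0.032266, doc_c: 0.032002

# Naive alternative: averaging raw scores lets the larger BM25 scale dominate.
bm25_raw = {"doc_a": 24.0, "doc_b": 11.0, "doc_c": 10.5}
cosine_raw = {"doc_a": 0.41, "doc_b": 0.88, "doc_c": 0.86}
naive = {d: (bm25_raw[d] + cosine_raw[d]) / 2 for d in bm25_raw}
print(max(naive, key=naive.get))  # doc_a
```

RRF puts doc_b first because it sits in the top two of both lists, while the naive average simply follows the BM25 order and ignores doc_a’s weak semantic match.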

Why RRF matters

- Stability across varied score distributions. Because RRF relies on rank positions, it avoids the distortion caused by different score scales or outliers.

- Resistance to outliers and noisy data. Extreme scores in one list cannot dominate the final ranking.

- Reward for consistent relevance. Items that appear near the top in both the lexical and vector lists are favoured, which matches the goal of surfacing content that is both keyword‑relevant and semantically appropriate.

Illustration to help you understand RRF

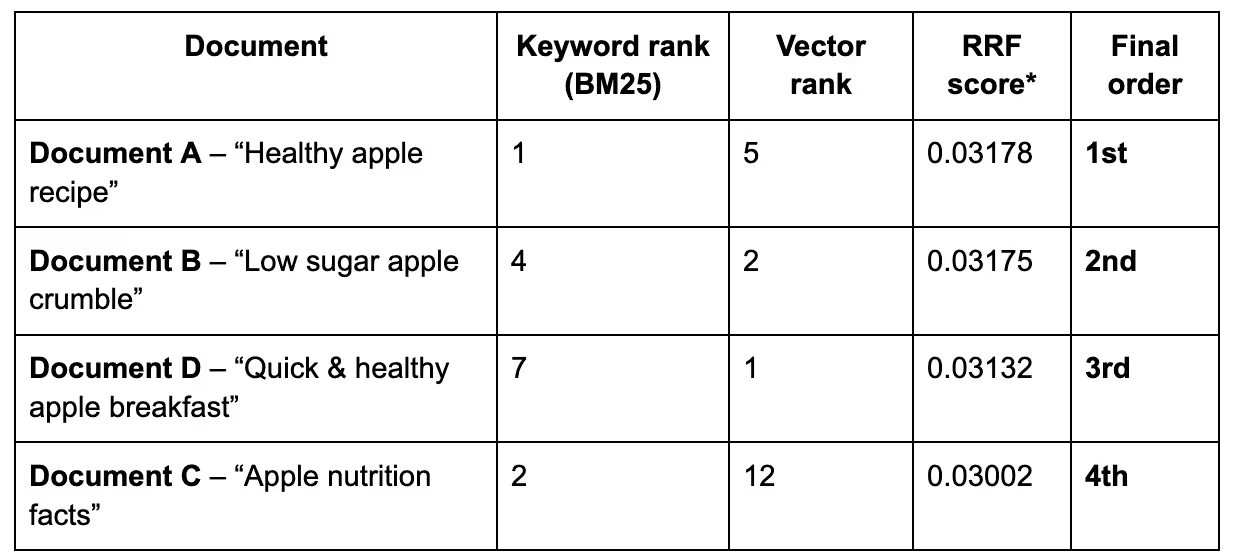

To illustrate RRF, the Marketing Stack team scored four hypothetical documents for the query “healthy apple recipes.” The ranks below come from two methods: BM25 (keyword rank) and vector search (embedding rank). A constant k of 60 was used in the RRF formula.

*RRF score = 1/(k + keyword rank) + 1/(k + vector rank); higher scores indicate stronger consensus.

Although Document C has the second‑best keyword rank, its poor vector ranking pushes it to the bottom. Document D, despite weaker keyword optimisation, jumps ahead because its vector rank is excellent. This illustrates that simply repeating keywords is not enough; your content must also align with the query’s intent.
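The footnote formula generalises to any set of ranked lists R. The worked arithmetic below uses two hypothetical rank pairs (not the documents in the illustration) to show how small the per‑list contributions become once k = 60 is added to each rank.

```latex
\mathrm{RRF}(d) = \sum_{r \in R} \frac{1}{k + \mathrm{rank}_r(d)}, \qquad k = 60

\text{Ranked 1st (keyword) and 2nd (vector): } \frac{1}{61} + \frac{1}{62} \approx 0.016393 + 0.016129 = 0.032522

\text{Ranked 2nd (keyword) and 10th (vector): } \frac{1}{62} + \frac{1}{70} \approx 0.016129 + 0.014286 = 0.030415
```

The consistently strong document wins, but only by about 0.0021, which is why steady performance across both lists, rather than one standout rank, tends to decide the fused order.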

How to align with RRF

- Balance keyword and semantic optimisation. Craft titles and headings that include essential terms, but ensure the body content addresses the underlying question comprehensively.

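- Use signals beyond the page. RRF itself only sees rank positions, so off‑page indicators such as user engagement, click‑through rate and backlink diversity can matter only insofar as they shape the individual rankings being fused (or are fused in as ranked lists of their own). Invest in building authority across channels so your content appears in both lists.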

- Monitor outlier pages. Pages ranking highly through one method but poorly through the other may need deeper content or improved technical optimisation.
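If you can obtain per‑method ranks for your pages (for example, from your own site search, where you control both retrievers), a simple gap check can surface these outliers. The rank data, the 20‑position threshold and the remediation hints below are hypothetical rules of thumb, not part of any search engine’s logic.

```python
def rank_gaps(keyword_ranks, vector_ranks, threshold=20, missing_rank=1000):
    """Flag pages whose keyword and vector ranks differ by more than `threshold`.

    Both inputs map URL -> rank (1 = best). A page absent from one list is treated
    as ranked at `missing_rank`, an arbitrary stand-in value.
    """
    flagged = []
    for url in keyword_ranks.keys() | vector_ranks.keys():
        kw = keyword_ranks.get(url, missing_rank)
        vec = vector_ranks.get(url, missing_rank)
        if abs(kw - vec) > threshold:
            # Hypothetical heuristic: strong lexically but weak semantically suggests
            # thin coverage; the reverse suggests the page rarely uses the query's terms.
            hint = "deepen topical coverage" if kw < vec else "add the query's key terms"
            flagged.append((url, kw, vec, hint))
    return sorted(flagged)

# Hypothetical ranks for three pages.
keyword_ranks = {"/apple-recipes": 2, "/apple-pie": 5, "/fruit-dishes": 40}
vector_ranks = {"/apple-recipes": 3, "/apple-pie": 35, "/fruit-dishes": 4}
flagged = rank_gaps(keyword_ranks, vector_ranks)
for row in flagged:
    print(row)
```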

Pillar 3: Semantic scoring - the last lap

Once RRF has produced a consolidated list, search engines perform a deep semantic evaluation. Azure’s semantic ranker, for example, applies multilingual deep learning models to re‑rank an initial BM25‑ or RRF‑ranked set. It then performs three key actions:

- Contextual scoring. Models compute a new relevance score based on the semantic meaning of each document relative to the query. Because the models understand context (e.g., the multiple meanings of “capital”), they promote results that fit the intended sense.

- Semantic captions and answers. The ranker extracts verbatim sentences that summarise why a document is relevant and can optionally provide direct answers. These captions power rich SERP snippets and answer boxes.

- Query rewriting. To broaden coverage, the system can generate up to ten semantically similar query variants. Results from these expanded queries are merged and rescored.

Each document receives a reranker score on a scale from 0 to 4, where 4 indicates a highly relevant, complete answer and 0 means irrelevant. Only the top 50 results from the previous stage are evaluated, so gaining entry into this final exam requires strong performance earlier in the pipeline.
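The top‑50 cutoff has a practical consequence: a page that the earlier stages rank 51st or lower is never rescored, however well it answers the query. The sketch below assumes a pluggable scoring function; toy_score is a hypothetical stand‑in for a real reranking model that returns values on the 0 to 4 scale.

```python
def semantic_rerank(candidates, query, score_fn, window=50):
    """Rescore only the first `window` candidates and return them best-first.

    Candidates beyond the window are never scored, mirroring a top-50 cutoff.
    """
    head = candidates[:window]
    return sorted(head, key=lambda doc: score_fn(query, doc["text"]), reverse=True)

def toy_score(query, text):
    """Hypothetical stand-in for a reranker: 4 x share of query terms in the text."""
    terms = set(query.lower().split())
    return 4 * len(terms & set(text.lower().split())) / len(terms)

# Sixty hypothetical candidates from the fusion stage, best fused rank first.
candidates = [{"id": i, "text": "generic page"} for i in range(60)]
candidates[10]["text"] = "healthy apple recipes"               # inside the window
candidates[55]["text"] = "healthy apple recipes for everyone"  # outside the window

reranked = semantic_rerank(candidates, "healthy apple recipes", toy_score)
print(reranked[0]["id"])                     # 10
print(any(d["id"] == 55 for d in reranked))  # False
```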

Optimising for semantic scoring

- Answer the question fully. Write content that comprehensively addresses the query, not just part of it. Partial answers may receive a reranker score of 2 or 3, while complete answers are the ones that approach 4.

- Use natural language and context. Search models favour conversational phrasing and clear explanations. Avoid jargon where possible and provide definitions for industry terms.

- Include semantically rich headings and captions. Break your content into sections with descriptive subheadings to make it easier for summarisation models to extract key sentences.

- Implement structured data. Schema.org markup helps search engines understand the page’s entities, relationships and question‑answer structure, increasing the likelihood of appearing in rich results.

Adopt the latest SEO strategies

To align with how search engines actually work in 2025, marketers should adopt the following strategies:

- Think in topics, not individual keywords. Build comprehensive content hubs that cover a subject from multiple angles. Use entity research tools to identify related concepts and questions.

- Create clear intent pathways. Map content to the stages of the buyer journey: informational, consideration, and transactional. Each stage should address the user’s likely questions and provide next steps.

- Optimise for both humans and machines. Write engaging prose with natural language for readers, but structure it in a way that machines can understand: use headings, bullet lists and schema markup.

- Measure beyond rankings. Track user engagement, dwell time and conversion metrics to understand whether your content is truly satisfying intent. Search engines may also use these implicit signals to adjust rankings.

- Leverage newer tools. Experiment with semantic SEO optimisation platforms (Surfer SEO, NeuronWriter, Rank Math) that analyse competitor pages and suggest semantic entities to include. Pair their suggestions with careful human editing to ensure quality.

Practices to avoid while improving search ranking

Avoiding outdated practices is just as important as adopting new ones. Here are some traps to steer clear of:

- Obsession with keyword density. Repeating the same phrase twenty times is unlikely to help and can harm readability and performance. Focus on comprehensive coverage instead.

- Creating thin content. Pages that provide minimal value or merely repeat definitions without offering deep insight are unlikely to pass the semantic scoring stage.

- Ignoring content structure. Unstructured walls of text make it harder for models to extract relevant information. Break up content into digestible sections and use lists, tables (for numbers or names) and schema markup.

- Overlooking user intent. If your page addresses only one of the many intents behind a query, the reranker will recognise that you haven’t answered the full question.

What we’ve learned about modern search ranking

Modern search ranking is a multi‑stage process combining lexical retrieval, vector embeddings, rank‑based fusion and deep semantic scoring. Learning to speak the language of search means moving beyond keyword stuffing and embracing concepts, context and user intent.

Keep these tips in mind:

- Embeddings unlock meaning. Dense vector representations allow engines to understand synonyms, relationships and context. Use varied language and related entities.

- RRF rewards consistency. Documents that appear highly in both lexical and semantic lists rise to the top, while outliers are dampened.

- Semantic scoring is the final hurdle. Deep models evaluate whether your content truly answers the query and will demote pages that only partially satisfy the intent.

By adopting a topic‑centric, user‑focused and well‑structured content strategy, you can ensure your work stands out in a search environment where novelty and meaning are the new currency.